Journal Name: Journal of Multidisciplinary Research and Reviews

Article Type: Review

Received date: 30 September, 2019

Accepted date: 18 October, 2019

Published date: 25 October, 2019

Citation: Kikuchi A, Kikuchi I (2019) Computational Algebraic Geometry and Quantum Mechanics: An Initiative toward Post Contemporary Quantum Chemistry. J Multidis Res Rev Vol: 1, Issu: 2 (47-79).

Copyright: © 2019 Kikuchi A. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Abstract

A new framework in quantum chemistry has been proposed recently (“An approach to first principles electronic structure calculation by symbolic-numeric computation” by A. Kikuchi). It is based on the modern technique of computational algebraic geometry, viz. the symbolic computation of polynomial systems. Although this framework belongs to molecular orbital theory, it fully adopts the symbolic method. The analytic integrals in the secular equations are approximated by the polynomials. The indeterminate variables of polynomials represent the wave-functions and other parameters for the optimization, such as atomic positions and contraction coefficients of atomic orbitals. Then the symbolic computation digests and decomposes the polynomials into a tame form of the set of equations, to which numerical computations are easily applied. The key technique is Gröbner basis theory, by which one can investigate the electronic structure by unraveling the entangled relations of the involved variables. In this article, at first, we demonstrate the featured result of this new theory. Next, we expound the mathematical basics concerning computational algebraic geometry, which are necessitated in our study. We will see how highly abstract ideas of polynomial algebra would be applied to the solution of the definite problems in quantum mechanics. We solve simple problems in “quantum chemistry in algebraic variety” by means of algebraic approach. Finally, we review several topics related to polynomial computation, whereby we shall have an outlook for the future direction of the research.

Keywords

Quantum mechanics, Algebraic geometry, Commutative algebra, Grönber basis, Primary ideal decomposition, Eigenvalue problem in quantum mechanics, Molecular orbital theory; Quantum chemistry, Quantum chemistry in algebraic variety, First principles electronic structure calculation, Symbolic computation, symbolic-numeric solving, Hartree-Fock theory, Taylor series, Polynomial approximation, Algebraic molecular orbital theory.

Abstract

A new framework in quantum chemistry has been proposed recently (“An approach to first principles electronic structure calculation by symbolic-numeric computation” by A. Kikuchi). It is based on the modern technique of computational algebraic geometry, viz. the symbolic computation of polynomial systems. Although this framework belongs to molecular orbital theory, it fully adopts the symbolic method. The analytic integrals in the secular equations are approximated by the polynomials. The indeterminate variables of polynomials represent the wave-functions and other parameters for the optimization, such as atomic positions and contraction coefficients of atomic orbitals. Then the symbolic computation digests and decomposes the polynomials into a tame form of the set of equations, to which numerical computations are easily applied. The key technique is Gröbner basis theory, by which one can investigate the electronic structure by unraveling the entangled relations of the involved variables. In this article, at first, we demonstrate the featured result of this new theory. Next, we expound the mathematical basics concerning computational algebraic geometry, which are necessitated in our study. We will see how highly abstract ideas of polynomial algebra would be applied to the solution of the definite problems in quantum mechanics. We solve simple problems in “quantum chemistry in algebraic variety” by means of algebraic approach. Finally, we review several topics related to polynomial computation, whereby we shall have an outlook for the future direction of the research.

Keywords

Quantum mechanics, Algebraic geometry, Commutative algebra, Grönber basis, Primary ideal decomposition, Eigenvalue problem in quantum mechanics, Molecular orbital theory; Quantum chemistry, Quantum chemistry in algebraic variety, First principles electronic structure calculation, Symbolic computation, symbolic-numeric solving, Hartree-Fock theory, Taylor series, Polynomial approximation, Algebraic molecular orbital theory.

Introduction

Dear Readers,

If you are researchers or students with the expertise of physics or chemistry, you might have heard of “algebraic geometry” or “commutative algebra”. Maybe you might have heard only of these words, and you might not have definite ideas about them, because these topics are taught in the department of mathematics, not in those of physics and chemistry. You might have heard of advanced regions of theoretical physics, such as super-string theory, matrix model, etc., where the researchers are seeking the secret of the universe by means of esoteric theories of mathematics with the motto algebraic geometry and quantum mechanics. And you might be desperate in imagining the required endurance to arrive at the foremost front of the study... However, algebraic geometry is originated from rather a primitive region of mathematics. In fact, it is an extension of analytic geometry which you must have learned in highschools. According to Encyclopedia Britannica, the definition of the word goes as follows:

algebraic geometry, study of the geometric properties of solutions of polynomial equations, including solutions in three dimensions beyond three...

It simply asserts that algebraic geometry is the study of polynomial systems. And polynomial is ubiquitous in every branch of physics. If you attend the lecture of elementary quantum mechanics, or you study quantum chemistry, you always encounter secular equations in order to compute the energy spectrum. Such equations are actually given by the polynomial systems, although you solve them through linear algebra. Indeed, linear algebra is so powerful that you have almost forgotten that you are laboring with polynomial algebraic equations.

Be courageous! Let us have a small tour in the sea of QUANTUM MECHANICS with the chart of ALGEBRAIC GEOMETRY. Your voyage shall never be in stormy and misty mare incognitum. Having chartered a cruise ship, the “COMMUTATIVE ALGEBRA”, we sail from a celebrated seaport named “MOLECULAR ORBITAL THEORY”.

The molecular orbital theory [1] in the electronic structure computation of quantum chemistry is computed by the solution of the secular equation, if one adopts the localized atomic orbital basis defined on the set of atoms at the positions with other optimizable variables :

in which the matrix elements are defined by

and

In these expressions, is the Hamiltonian; the vector represents the coefficients in LCAO wave-function ; E is the energy spectrum. The Fock matrix H and the overlap matrix S are, in theory, computed symbolically and represented by the analytic formulas with respect to the atomic coordinates and other parameters included in the Gaussian- or Slater- type localized atomic basis; they are, in practice, given numerically and the equation is solved by means of linear algebra. In contrast to this practice, it is demonstrated by Kikuchi [2] that there is a possibility of symbolic-numeric computation of molecular orbital theory, which can go without linear algebra: the secular equation is given by the analytic form and approximated by the polynomial system, which is processed by the computational algebraic geometry. The symbolic computation reconstructs and decomposes the polynomial equations into a more tractable and simpler form, by which the numerical solution of the polynomial equation is applied for the purpose of obtaining the quantum eigenstates. The key technique is the Gröbner basis theory and triangulation of polynomial set. Let us review the exemplary computation of hydrogen molecule in [2].

Let , , be the wavefunctions, the atomic charges, and the atomic positions. The total energy functional of Hartree-Fock theory is given by

The secular equation is derived from the stationary condition with respect to the wave-function

The energy minimum with respect to the atomic coordinate gives the stable structure:

Let us consider the simple hydrogen, as in Figure 1.

Figure 1: The image of a hydrogen molecule, composed of two atoms.

Assume that the two hydrogen atoms are placed at and . By the simplest model, we can choose the trial wave-functions for up- and down- spin at the point as follows:

Where

and are the real variables to be determined. Let ev and ew to be Lagrange multipliers for and . Since these two wave-functions are orthogonal in nature, due to the difference of spin, we assume that the multipliers are diagonal: for .

From these expressions, all of the integrals involved in the energy functional are analytically computed. (As for the analytic forms of the integrals, see the supplement of [2].) Then, with respect to inter-atomic distance , Taylor expansion of the energy functional is computed up to degree four, at the center of . The polynomial approximation is given by

OMEGA = (3571 - 1580*a^2 - 3075*a*b - 1580*b^2 - 1580*c^2 + 625*a^2*c^2 + 1243*a*b*c^2 + 620*b^2*c^2 - 3075*c*d + 1243*a^2*c*d + 2506*a*b*c*d + 1243*b^2*c*d - 1580*d^2

.......................................................................................................... .......................................................................................................... ..........................................................................................................

- 86*a*b*c*d*r^4 - 17*b^2*c*d*r^4 + 12*d^2*r^4 - 4*a^2*d^2*r^4 - 17*a*b*d^2*r^4 + 13*a*b*ev*r^4 + 13*c*d*ew*r^4)/1000

where the inter-atomic distance is represented by r (for the convenience of polynomial processing).

The equations used in the study are quite lengthy, so we only show the part of them. We give the exact ones in the appendix (supplementary material): the energy functional in Appendix A; the secular equations in Appendix B; the Gröbner bases in Appendix C; the triangulation in Appendix D.

In order to reduce the computational cost, the numerical coefficients are represented by the fraction, by the truncation of decimal numbers. We make the change of variables from to in the following way:

Consequently, the wave-functions are represented by the sum of symmetric and anti-symmetric parts:

The stationary conditions , , , , with respect to t, s, u, v, yields those equations:

S[1]32*s*u*v*r^4-336*s*u*v*r^3+992*s*u*v*r^2-160*s*u*v*r

.......................................................

+126*t*r^4-882*t*r^3+1748*t*r^2+1896*t*r-12470*t=0

S[2]156*s*u^2*r^4-1068*s*u^2*r^3+2248*s*u^2*r^2-80*s*u^2*r

.......................................................

+992*t*u*v*r^2-160*t*u*v*r+40*t*u*v=0

S[3]156*s^2*u*r^4-1068*s^2*u*r^3+2248*s^2*u*r^2-80*s^2*u*r

.......................................................

+126*u*r^4-882*u*r^3+1748*u*r^2+1896*u*r-12470*u=0

S[4]-52*s^2*v*r^4+236*s^2*v*r^3-32*s^2*v*r^2-104*s^2*v*r

.......................................................

-30*v*r^4+330*v*r^3-1148*v*r^2+760*v*r-170*v=0

The stationary conditions , , with respect to ev, ew, are the normalization condition for the wave-functions. They yield two equations:

S[5]-13*s^2*r^4+139*s^2*r^3-458*s^2*r^2+63*s^2*r

.......................................................

-63*t^2*r-3986*t^2+1000=0

S[6]13*u^2*r^4-139*u^2*r^3+458*u^2*r^2-63*u^2*r

.......................................................

+63*v^2*r-14*v^2+1000=0

The stable condition for the molecular geometry δΩ/δr yields this:

312*s^2*u^2*r^3-1602*s^2*u^2*r^2+2248*s^2*u^2*r

.......................................................

.......................................................

+380*v^2+740*r^3-3903*r^2+7288*r-5102=0

For simplicity, however, we replace the last equation with a simple one (of the fixed inter-atomic distance) as follows:

S[7] 5*r-7=0.

It fixes the inter-atomic distance at 1.4.

By processing polynomials S[1],...,S[7], We obtain 18 polynomials in the Grönber basis J[1],...,J[18]. We only show the skeletons of them, because the coefficients are too lengthy. The exact form is given in the appendix.

J[1]=r-1.4

J[2]=(...)*ew^6+(...)*ew^5+(...)*ew^4

+(...)*ew^3+(...)*ew^2+(...)*ew+(...)

J[3]=(...)*ev+(...)*ew^5+(...)*ew^4+(...)*ew^3

-(...)*ew^2-(...)*ew+(...)

J[4]=(...)*v*ew^4+(...)*v*ew^3+(...)*v*ew^2

-(...)*v*ew-(...)*v

J[5]=(...)*v^2-(...)*ew^5-(...)*ew^4-(...)*ew^3

-(...)*ew^2+(...)*ew-(...)

J[6]=(...)*u*ew^4+(...)*u*ew^3+(...)*u*ew^2

+(...)*u*ew+(...)*u

J[7]=(...)*u*v*ew^2+(...)*u*v*ew+(...)*u*v

J[8]=(...)*u^2+(...)*ew^5+(...)*ew^4+(...)*ew^3

+(...)*ew^2-(...)*ew-(...)

J[9]=(...)*t*ew^4+(...)*t*ew^3+(...)*t*ew^2

+(...)*t*ew+(...)*t

J[10]=(...)*t*v*ew^3+(...)*t*v*ew^2+(...)*t*v*ew+(...)*t*v

J[11]=(...)*t*u*ew^3+(...)*t*u*ew^2+(...)*t*u*ew+(...)*t*u

J[12]=(...)*t^2-(...)*ew^5-(...)*ew^4-(...)*ew^3

+(...)*ew^2+(...)*ew-(...)

J[13]=(...)*s*ew^2+(...)*s*ew-(...)*s-(...)*t*u*v*ew-(...)*t*u*v

J[14]=(...)*s*v*ew-(...)*s*v-t*u*ew^2-(...)*t*u*ew-(...)*t*u

J[15]=(...)*s*u*ew+(...)*s*u+(...)*t*v*ew^2+(...)*t*v*ew+(...)*t*v

J[16]=(...)*s*u*v+(...)*t*ew^3+(...)*t*ew^2+(...)*t*ew+(...)*t

J[17]=(...)*s*t-(...)*u*v*ew-(...)*u*v

J[18]=(...)*s^2+(...)*ew^5+(...)*ew^4+(...)*ew^3

-(...)*ew^2-(...)*ew-(...)

The triangular decomposition to the Gröbner basis is computed, which contains five decomposed sets of equations T[1],..., T[5]. Here the only the skeleton is presented, while the details are given in the appendix. Observe that one decomposed set includes seven entries; from the first entry to the last, the seven variables are added one by one, with the order of r, ew, ev, v, u, t, s, in the arrangement of a triangle. Now we can solve the equation by determining the unknown variables one by one. As a result, the triangular decomposition yields four subsets of the solutions of equations: the possible electronic configurations are exhausted, as is shown in Table 1.

Table 1: This table shows the solutions for the secular equation after the triangulation. The electron 1 and 2 lie in the up- and down- spin respectively; and the result exhausts the possible four congurations of the ground and the excited states.

| Solution 1 | Solution 2 | Solution 3 | Solution 4 | |

|---|---|---|---|---|

| t | -0.53391 | 0.00000 | -0.53391 | 0.00000 |

| s | 0.00000 | -1.42566 | 0.00000 | -1.42566 |

| u | -0.53391 | -0.53391 | 0.00000 | 0.00000 |

| v | 0.00000 | 0.00000 | -1.42566 | -1.42566 |

| ev | -0.62075 | -0.01567 | -0.62734 | 0.01884 |

| ew | -0.62075 | -0.62734 | -0.01567 | 0.01884 |

| r | 1.40000 | 1.40000 | 1.40000 | 1.40000 |

| The total energy | -1.09624 | -0.49115 | -0.49115 | 0.15503 |

| electron 1 | symmetric | asymmetric | symmetric | asymmetric |

| electron 2 | symmetric | symmetric | asymmetric | asymmetric |

T[1]:

_[1]=r-1.4

_[2]=(...)*ew+(...)

_[3]=(...)*ev+(...)

_[4]=v

_[5]=(...)*u^2-(...)

_[6]=t

_[7]=(...)*s^2-(...)

T[2]:

_[1]=r-1.4

_[2]=ew-(...)

_[3]=ev-(...)

_[4]=v^2-(...)

_[5]=u

_[6]=t

_[7]=(...)*s^2-(...)

T[3]:

_[1]=r-1.4

_[2]=ew^2+(...)*ew+(...)

_[3]=ev-ew

_[4]=v^2-(...)*ew-(...)

_[5]=u^2+(...)*ew+(...)

_[6]=t^2+(...)*ew+(...)

_[7]=s+(...)*t*u*v*ew+(...)*t*u*v

T[4]:

_[1]=r-1.4

_[2]=ew+(...)

_[3]=ev+(...)

_[4]=v

_[5]=u^2-(...)

_[6]=t^2-(...)

_[7]=s

T[5]:

_[1]=r-1.4

_[2]=ew+(...)

_[3]=ev+(...)

_[4]=v^2-(...)

_[5]=u

_[6]=t^2-(...)

_[7]=s

This is one of the featured results in [2]. The author of that work had demonstrated the procedure of the computation in a factual way, but he had not explained the underlying mathematical theory so minutely. Consequently, it is beneficial for us to grasp some prerequisites of commutative algebra and algebraic geometry because these theories are still strange to a greater part of physicists and chemists. In the following sections, we review concepts of commutative algebra and algebraic geometry, which are utilized in this sort of computation. Next, we learn about Grönbner bases. We will find that the “primary ideal decomposition” in commutative algebra surrogate the eigenvalue problem of linear algebra. Then we apply our knowledge to solve simple problems of molecular orbital theory from the standpoint of polynomial algebra. In the end, we take a look at the related topics which shall enrich the molecular orbital theory with a taste of polynomial algebra, such as “polynomial optimization” and “quantifier elimination”. The employment of these methods will show the course of future studies.

Basics of Commutative Algebra and Algebraic Geometry

Our handy tool is polynomial and our chief concern is how to solve the set of polynomial equations. Such topics are the themes of commutative algebra. If we would like to do with geometrical view, the task lies also in algebraic geometry. From this reason, in this section, we shall review mathematical definitions and examples related to the theory of polynomials.

N. B.: The readers should keep in mind that the chosen topics are mere “thumbnails” to the concepts of profound mathematics. As for proofs, the readers should study from more rigorous sources; for instance, for commutative algebra, the book by Eisenbud [3] or the Book by Reid [4]; for algebraic geometry, the book by Perrin [5], for Gröbner bases, the works by Cox, Little, and O’Shea [6,7], the book by Becker and Weispfenning [8], or the book by Ene and and Herzog [9].

Polynomial and ring

A polynomial should be defined in a ring. A ring is composed by the coefficient (in some field) and the indeterminate variables. Let K be the coefficient field. The polynomial ring is the K-vector space, with the basis elements (monomials) of the form

We define the degree of a monomial by

The degree of polynomial is given by

A homogeneous polynomial is a polynomial, in which every non-zero monomial has the same degree.

Example 4.1

- is a homogeneous polynomial of degree 3.

- is not homogeneous.

Example 4.2

(Homogenization) A non-homogeneous polynomial is homogenized by means of an additional variable .

For

Ideal

Definition 4.1 An ideal in the polynomial ring is the set of polynomials, which is closed under these operation:

- and then

- and then .

We usually denote an ideal by the generators, such as or .

The sum and the product of the ideals are defined by

The ideal quotient is defined to be the ideal

For two ideals I and m, the saturation is defined by

(Observe that for I < j. In many cases, we do not have to consider the union of infinite number of ideal quotients, as the extension by union with shall “saturates” and stop to grow at some finite i, if the ring is Noetherian, as will be discussed later.)

The radical of an ideal I is defined by

Affine algebraic set (Affine algebraic variety)

Definition 4.2. Let S be the subset of a ring . We denote the common zeros of polynomials in S by

We call the affine algebraic set (or affine variety) defined by

Example 4.3 In

,

.

Example 4.4 In

,

Example 4.5 In

,

.

Example 4.6 In

,

.

Also, these properties of the affine algebraic set are notable.

- If , .

- A point is an affine algebraic set:

- If then

- The intersection of affine algebraic sets is also an affine algebraic set:

- Or

- The finite union of affine algebraic sets is also an affine algebraic set:

The interpretation of the saturation in algebraic geometry is this: the saturation is the closure (in the sense of topology) of the complement of in .

Definition 4.3 Let be a subset of (where k is an arbitrary field). The ideal of is defined as

In other words, is the set of polynomial functions which vanish on .

Example 4.7 .

Example 4.8 For the ideal

the saturation is given by

is the composure of the curve and the doubled line . The saturation removes the line from that composure; the point (which lies on ), however, is not removed, because the saturation is the closure.

The ideal of an affine algebraic set of a ideal I, denoted by , is computable.

Example 4.9 For , and . Observe that the . This is the consequence of the famous theorem of Hilbert (Nullstellensatz), which we will learn later.

Residue class ring or quotient ring

We can define “residue class rings”. Let be an ideal in a ring R, and f is an element in R. The set is “the residue class of f modulo I”; f is a representative of the residue class . We denote the set of residue classes modulo I by . It also has the ring structure through addition and multiplication. Several resources use the term “quotient ring” or “factor ring” for the same mathematical object. In general, an ideal depicts geometric objects, which might be discrete points or might be connected and lie in several dimensions. They are represented by the residue class ring:

Let us see several examples.

Example 4.10 The elements in the ring are the polynomial . We divide by , so that

We divide by , so that

The residue is the representative of when it is mapped into the residue class ring. We might assume that (the representative of in the residue class ring) would not be an undetermined variable, but a certain number (outside of ) such that .

Example 4.11 .

We assume that the representatives and of and in the residue class ring would not be undetermined variables, but a pair of numbers and such that , and that depicts a unit circle, as a one-dimensional geometric object in .

Example 4.12

The representative and of and are considered to the points or (which lie in the intersection of and ). This example is a simplest case of zero-dimensional ideal.

Prime and primary ideal

There are two fundamental types of ideal: prime ideal and primary ideal.

Definition 4.4 An ideal I ( ) is prime, if , and, if , then or .

Definition 4.5 An ideal I is primary, if , and, if , then or for some ; that is to say, every zero divisor of is nil-potent.

Example 2.13 is a prime ideal.

Example 4.14 P= is a primary ideal: and are zero divisors in ; and .

Example 4.15 Every prime ideal is primary.

In the affine algebraic set, these two properties are equivalent.

- Ideal is a prime ideal.

- is irreducible.

The correspondence between variety and ideal

In order to unify the algebraic and geometric views, let us review the correspondence between varieties and ideals. There are correspondences:

and

Where the ideal and the variety are defined in the previous sections.

Also there are one-to-one correspondences:

Example 4.16 For the ideal , . We have .

Example 4.17 For the non-prime ideal , .

When we compute or analyze for an ideal , it might be convenient for us to work with or its irreducible component because the defining ideal would be simpler. The principle of “divide and conquer” is equally effective in symbolic computation.

Noetherian ring

Definition 4.6 A ring is Noetherian when it is finitely generated.

This means that there is no infinite ascending sequence of ideals: if there is an ascending sequence of ideals, such that

it terminates somewhere in the following sense:

Example 4.18 and are Noeterian rings. If there is a computational process in these rings which would create the ascending sequence of ideals, it must terminate after finite number of steps.

Example 4.19 If R is a Noetherian ring, then is Noetherian (as the consequence of the Hilbert basis theorem) [3]. By induction, is also a Noetherian ring.

Example 4.20 The power series ring is a Noetherian ring.

Example 4.21 If R is a Noetherian ring and I is a two-sided ideal (such that for , and ), then the residue class ring is also Noetherian. In other words, the image of a surjective ring homomorphism of a Noetherian ring is Noetherian.

Dimension and Krull dimension

Let X be a topological space. The dimension of X is the maximum of the lengths of the chains of irreducible closed subsets of X. The chain is the relation of inclusion as this:

We have seen that for a prime ideal P, the affine algebraic set is an irreducible closed subset. Hence we define a kind of dimension related to prime ideals. For a prime ideal , we can construct the chain of prime ideals with length n of the form

with prime ideals ,..., . The chain is not necessarily unique, and we denote the maximum of the length of chains by height ( ). The Krull dimension of a ring is the maximal length of the possible chains of prime ideals in . We denote it by dimk(A)

Recall that for two prime ideals such that , ; the inclusion is reversed.

Example 4.22 We have dimk ℝ[x_1,x_2,...,x_n]=n, because the maximal chain of primes ideals is given by

One often refers to the Krull dimension of the residue class ring by “dimension of the ideal I”. The smallest prime ideal ideal is I itself (when I is prime) or a minimal including I (as one might study a non-prime ideal I), and the dimension counting ascends from as the start-point.

Example 4.23 Consider in . This ideal is not prime. In order to count the dimension of the ideal I, the chain of primes is given by or , since and are the minimal prime ideals over . Hence .

Example 4.24 Let f be a polynomial in the ring of dimension n, (say, ). We assume that f is not the zero divisor, i.e. there is no element such that and that it is not invertible, namely, there is no element g∈S such that . Then has dimension n-1. A simple example is , which is the line defined by the equation .

These examples seem to be trivial but demand us a certain amount of technical proof to show the validity of the statements. (See the argument in [5].)

Zero-dimensional ideal

As we have seen, an ideal I in a ring is defined by the set of polynomials. The points , which are the zero of the generating polynomials, determine the affine algebraic set . If the affine algebraic set is a discrete set, the ideal I is said to be “zero-dimensional”. If we have to solve the set of polynomials, we have to work with the zero-dimensional ideal, where we shall find the solution as the discrete set of points. It is fairly difficult to find the zero-set for general cases. Hence it is important to foresee whether an ideal is zero-dimensional or not. The criterion for an ideal to be zero-dimensional is given by Gröbner basis theory, which we shall see later.

Nullstellensatz

Assume that k is an algebracally closed field.

Theorem 4.1 Weak Nullstellensatz Let be an ideal (different from itself), then is nonempty.

Theorem 4.2 (Nullstellensatz) Let , then .

Spectrum of ring and Zariski topology

As the set of polynomials define the geometric objects, we can adopt a view of geometry. In this section, we see a bit of it.

We say that a variety is irreducible when it is not empty and not the union of two proper sub-varieties, namely, .

For a prime ideal P in a ring S, is irreducible.

For a ring , we define the ring spectrum by

Spec(A) = {prime ideals of A}

For there is a one-to-one correspondence between Spec( ) and irreducible sub-varieties of .

Every maximal ideal (hence being prime) in with an algebraically closed field (such as ) and an ideal I has the form

for some point . (Notice that, in case of , .)Hence there is a one-to-one correspondence

by means of

The Zariski topology of a variety is defined by assuming that the sub-varieties are the closed sets, whereby the union and the intersection of a family of variety are also closed sets. For an algebraically closed field , these two statements are valid.

- (i) Any decreasing chain of varieties in eventually terminates.

- (ii) Hence any non-empty set in has a minimal element.

A variety which satisfies the descending chain condition for closed subsets is called to be Noetherian. Observe that the chain for the Noetherian varieties is descending, while the chain for the ideals is ascending when we have defined the Noetherian ring.

We define another type of topology in Spec( ): the Zariski topology of Spec( ), in which the closed sets are of the form

Two types of Zariski topology for varieties and Spec( ) have similar properties. The comparison is given in Chapter 5 of the book of Reid [4].

Unique factorization domain

Definition 4.7 An integral domain is a non-zero commutative ring in which the product of any two non-zero elements is non-zero.

Example 4.25 and are integral domains.

Definition 4.8 A unique factorization domain (UFD) is an integral domain in which any nonzero element has a unique factorization by the irreducible elements in the ring.

Example 4.26 is a UFD.

Example 4.27 For a field , is a UFD.

Example 4.28 For a UFD , is a UFD. By induction, is a UFD.

By the last example, and are UFDs. Hence any polynomial in these rings has unique factorization. However there are a lot of example which is not a unique factorization domain.

Example 4.29 is not a UFD, since it permits two different factorization for one element: .

Completion: formal description of Taylor expansion

In the computation of molecular orbital theory through computer algebra, as is presented in the introduction, we approximate the energy function by polynomials. We simply replace the transcendental functions in the energy functional (such as or with the corresponding formal power series, and we truncate these series at the certain degree. For this purpose, we execute Taylor expansion for the variable at a certain center point in the atomic distance. If we increase the maximum degree in Taylor expansion toward the infinity, the computation would converge to that by the exact analytic energy functional. Such a circumstance could be represented in a formal language of mathematics.

We need several definitions in order to present the formal description of Taylor expansion.

For a ring S, a “filtration” by the powers of a proper ideal I would be written as follows:

The sequence is given by the inclusion relation. The filtration determines the Krull topology of I-adic topology. An “inverse system” is a set of algebraic objects , which is directed by the index J with an order . In the inverse system, we have a family of map (homomorphism),

for all i ≤ j

and

i ≤ j ≤ k

Let and . The is the ideal in , generated by the monomials of degree in . We also assume that the inclusion is given in the sense of ideal so that ideals generated by monomials of lower degrees should contain those generated by monomials of higher degrees. We set by the quotient rings. Hence the entries in are the finite polynomials, in which the monomials in are nullified, and are represented by the set of polynomials up to degree . The operation of the map from to is to drop the monomials of higher degrees and to shorten polynomials. In case of the polynomial approximation by means of Taylor expansion, the map is the projection from finer to coarser approximations.

The inverse system can be glued together as a mathematical construction, which is called the “inverse limit” (or “projective limit”). The inverse limit is denoted and defined as

The inverse limit A has the natural projection (by which we can extract ).

The inverse limit is a “completion” of the ring with respect to , when the inverse limit is taken for the quotient rings in the following way:

is the ring of formal power series , and we can arbitrarily approximate the formal power series by some finite polynomials through the natural projection.

Example 4.30 Consider . Let and . is the set of polynomials of the form The Taylor expansion up to degree , , lies in . In the inverse limit of , namely, , the formal power series of , is defined.

We can assume the formal power series in would represent the inverse limit of “glued” Taylor expansions. In the algebraic formulation of molecular orbital theory, by means of inverse limit, we can bundle the different level of polynomial approximation with respect to the maximum polynomial degrees. Hence the natural map π means the model selection.

Such a mathematical formality might appear only to complicate the matter in practice, but it is important to introduce a neat “topology” in theory. The topology is constructed as follows: an object (i.e. a polynomial) s in a ring S has the nested (or concentrated) neighborhoods in ; the open basis of the neighborhoods is generated by the powers of a proper ideal and represented as

We say “open basis” in the sense of topology. If the reader is unfamiliar with topology, simply image that polynomials around a polynomial are sieved into different classes, which are represented by the above form. The powers of the ideal serve as the indicator of the distance between polynomials. In the terminology of topology, the completion makes a “complete” topological space. If we consider the inverse system for Taylor expansions at a point , the formal neighborhoods of should be small enough so that Taylor series should be convergent.

Example 4.31 Consider the map from to and the corresponding inverse system. Now and . A polynomial in has the open basis of neighborhoods, which is given by the set of the form

The extension to the multivariate case in is straightforward.

We interpret the neighborhoods of in the slightly different view. Any polynomial has the image in each of . Hence there are the different classes of polynomials around , which are represented by nil-potent ε as follows,

likewise,

Localization

Let be a ring of polynomial functions. We can consider the rational function (or the fraction) for . This sort of fraction is determined locally on a subset in , such that . For a fixed point , we define the subset of such that

Then, for the pair in , denoted , the fraction can be well-defined, although it is a “local function”. The set of fractions must be closed under multiplication and addition. Moreover the equivalence relation between two pairs in is given by

In commutative algebra, “localization” is defined in a more general way.

Definition 4.9 Let be a ring.

- A subset is “multiplicatively closed” if and for all .

- For and its multiplicatively closed subset , the equivalence relation between two elements in is given by

Then the set of equivalence classes

is the localization of at the multiplicatively closed subset S. It is a ring with addition and multiplication,

and

Example 4.32 Let P be a prime ideal in a ring . As the set is multiplicatively closed, we localize by S. It is usually denoted by and called the localization of R at the prime ideal P. This localization has the exactly one maximum ideal . In particular, for and , is the set of rational functions well defined around .

Example 4.33 Let be a commutative ring and let be a non-nilpotent element in . A multiplicatively closed subset is given by . The localization .

Example 4.34 Let (an affine algebraic set defined by the ideal ). Consider the ring . For a point in -axis, the function is equal to zero. So and should be equivalent as the elements in with . Indeed and in , although in . Hence the equivalence between and is proved. (This argument is not valid for , because at that point and it is not in .)

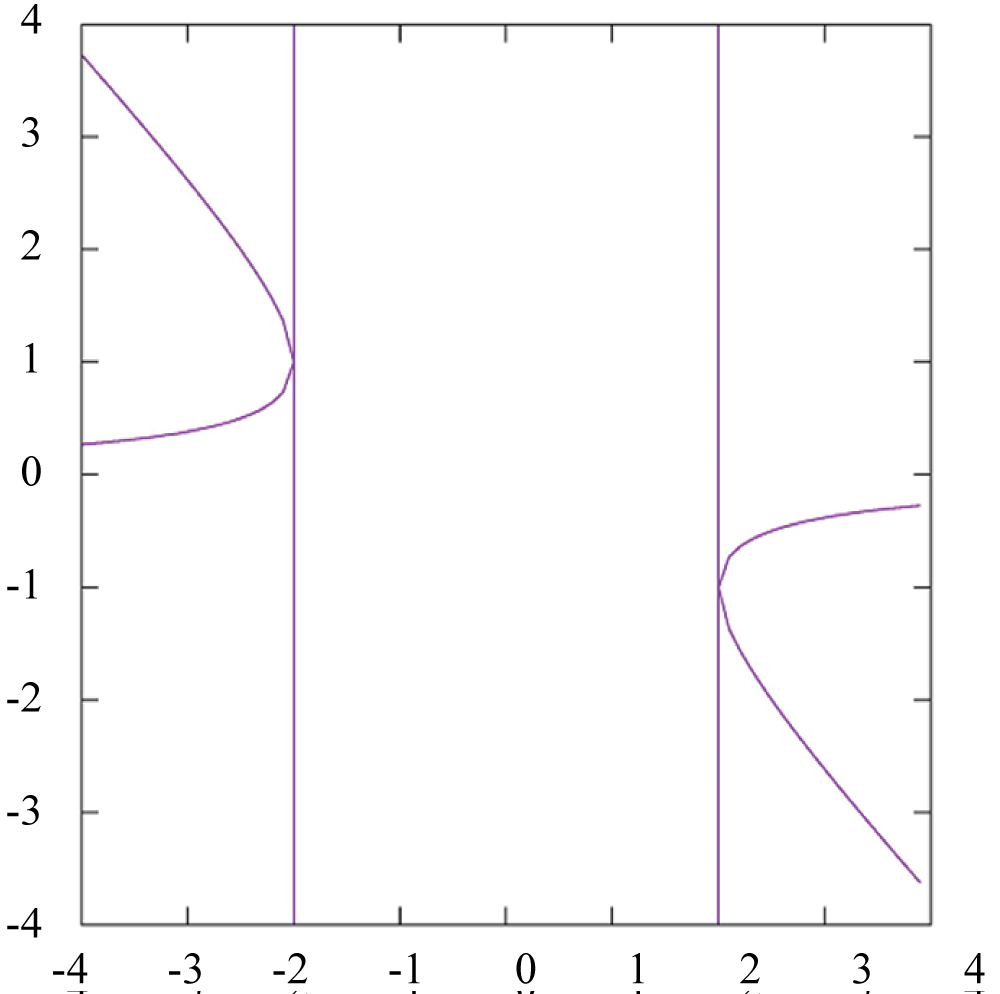

Example 4.35 Consider the secular equation

The roots are given by , and they lie in the affine algebraic set by the ideal in . Let and . Since is represented by another basis set (by a Gröbner basis with lexicographic order ) as it follows that

because is not in and it is an invertible element in . The ideal describes “how the affine algebraic set looks like” locally around the point . Indeed, the eigenvalue is the root of the determinant and the parabola looks like locally around .

Integrality

Consider the extension of quotient ring

We say is integral over when it satisfies the following condition:

Definition 4.10 Let be a ring extension. An element is integral over if it satisfies a monic polynomial equation with for all .

If every element in is integral over , then is integral over . We say that is the integral extension of . Then these statements hold:

Then these statements hold:

- If are integral over , is integral over .

- The succession of integral extensions makes the integral extension. If is integral over and is integral over S, then is integral over .

Example 4.36 is not an integral extension. From the geometrical viewpoint, the correspondence by this map (by inverting the direction) gives us the projection of the hyperbola to -axis.

There is no point in which is projected to the point in -axis, while any other points in -axis have a preimage in .

Example 4.37 Apply the change of coordinates to the above example: and . Then we obtain , which is an integral extension. From the geometrical viewpoint, the correspondence between two rings gives us the projection to the hyperbola to -axis. One always finds two points in , namely, , which are projected to an arbitrary point s in s-axis. The change of coordinates with polynomial maps of this sort (which might be non-linear) enables us to obtain an integral extension of a ring, even if the domain of the map is not an integral extension (Noether-normalization) [2].

Example 4.38 Consider the ideal . represents a simple secular equation .

is not an integral extension. In fact, can be represented by another basis , but there is no monic equation for . One cannot find the point in which is projected to the non-zero vector unless e takes certain particular values (the eigenvalues). This example is an application of integrality check by means of Gröbner basis. (This useful idea will be expounded later.)

Normalization

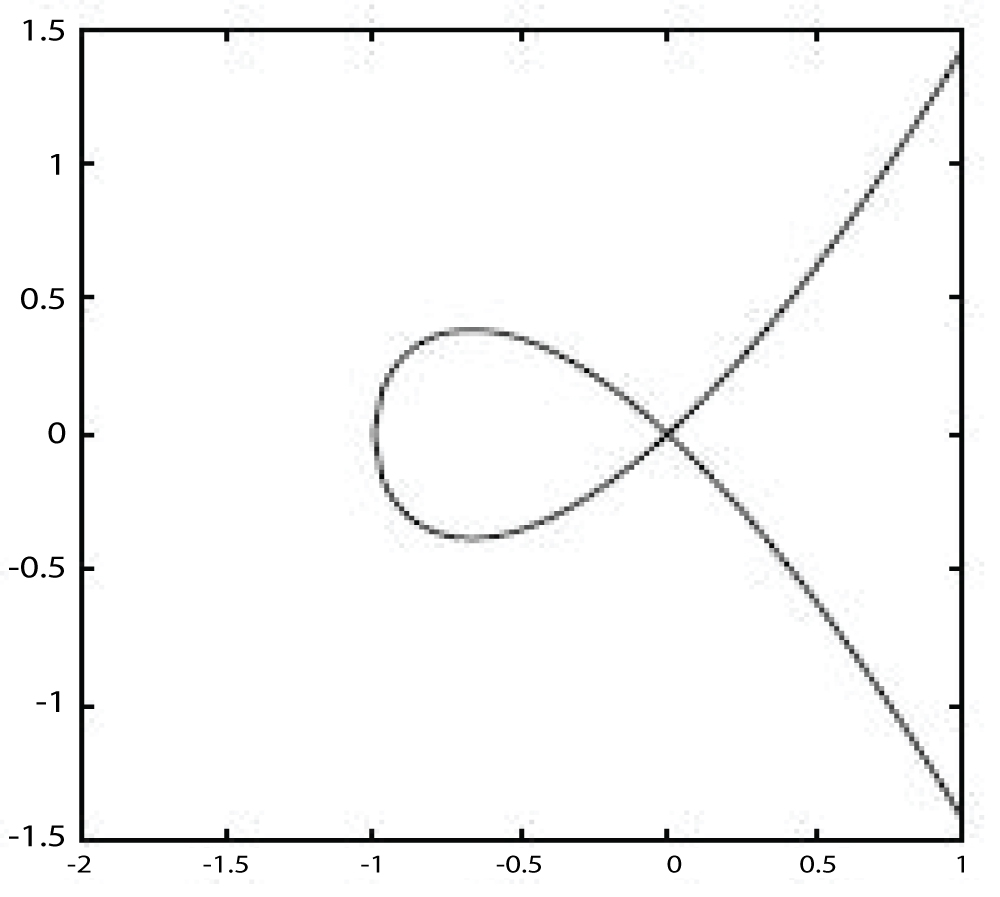

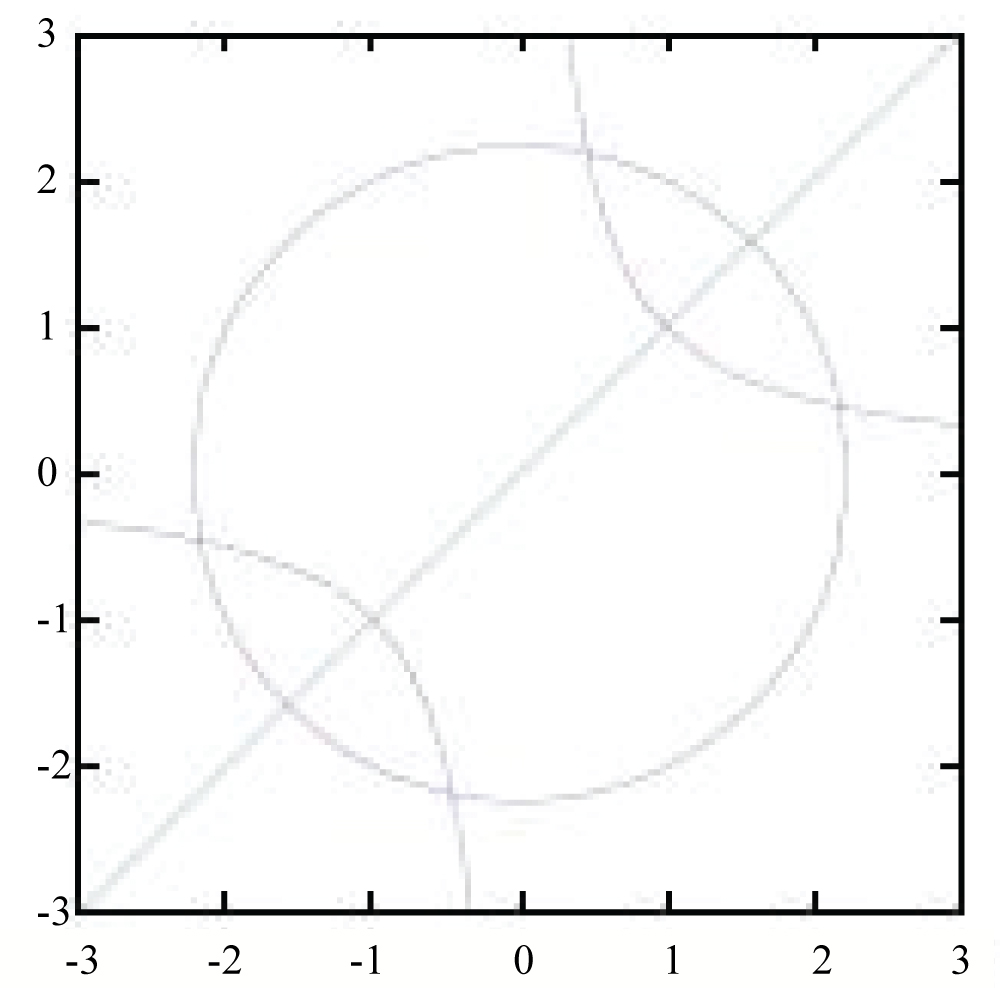

Let us consider a curve in , as in Figure 2. The curve has a singularity at where two branches intersect. Let . Then , , and . These equations make an ideal in . The affine algebraic variety has no singularity and it maps to the curve by the projection to .

Figure 2: The curve y2 = x3 + x2 with the singularity at (0; 0).

This is an example of the resolution of singularity. This sort of procedure lies in a broader concept of “normalization”. If is an integral domain, its normalization is the integral closure of in the quotient field (the field of fractions) of .

Definition 4.11 (Normal) Let be an integral domain with the field of fractions . Let f be any monic polynomial in . If every root of f also lies in , then is normal.

Example 4.39 One can prove that every UFD is normal.

Definition 4.12 (Normalization) Let be a commutative ring with identity. The normalization of is the set of elements in the field of fractions of which satisfy some monic polynomial with coefficients in .

Example 4.40 Observe that, in the above example, is in the quotient field of , and observe that the equation is a monic polynomial which guarantees that t is integral over . In fact, in order to prove that this resolution of singularity is literally the normalization, it is necessary for us to do the argument in detail. So we omit it now.

Example 4.41 Consider and . By setting and , the coordinate ring . Let us denote by . Then is a root of polynomial in , and . Hence is not normal.

Example 4.42 In the above example of the resolution of singularity, t is contained in the normalization of . As t is the root of , every element of is also a root of some monic polynomial in . (To prove the validity of this statement, we need some arguments by commutative algebra ) Hence, in the normalization of . Besides, is a UFD, hence normal. Therefore is the normalization of .

In fact, the procedure to find a normalization of is to find another ring with the normalization map ↪ . The algorithm by Decker et al. enables us to compute such normalization. If is a radical ideal, the normalization by the algorithm shall give the ideal decomposition of . The computation returns s polynomial rings and s prime ideals and s maps such that the induced map , whereby the target of (the product of quotient rings) is the normalization.

As we shall see in section 6, the primary ideal decomposition is a substitution for the solution of eiganvalue problem. It is not so surprising that we meet again the decomposition of an ideal in the desingularization of the algebraic variety. The secular equation is given as

or

Then we have to find which shall give the non-zero solution of the matrix equation

Observe that the matrix in the left-hand side is the Jacobian matrix

The singular locus of the algebraic affine set defined by is determined by the rank condition of the Jacobian matrix, namely by the condition that the Jacobian matrix is not of full rank. The desingularization is the normalization, and the latter would result in the decomposition of an ideal, by the algorithm of Decker. It is not so difficult to trace the algorithm of normalization given in [10,11].

We need some definitions. Let . For The Jacobian ideal is defined by the minors of the matrix

Where, with . Then the singular locus of is given by

Then we execute the computation by following these steps.

- Compute the singular locus . Let be a radical ideal such that contains this singular locus.

- Choose a non-zero divisor and compute . For a homomorphism from to , denoted by , and for a non-zero divisor in , .

- Let be the generators of . There are quadratic relations of the form .

- Also there are linear relations between (the syzygy) of the form

- Then a surjective map is given by ↠

- The kernel of this map is an ideal generated by the quadratic and linear relations of the form

- and it yields the extension of by

- This ring may be normal. If not, we make the extension of again. After finite steps of the successive extensions, we arrive at the normalization.

- • The criterion of normality: is normal if and only if . If this criterion is satisfied, we do not have to add extra . Then the algorithm stops.

Consider in . Let . The Jacobian matrix of is .

Then , or more simply , and . (Recall that the Gröbner basis of I is .) Hence we set as a radical ideal containing the singular locus. Choose as the non-zero divisor in . Then , since vanishes as an element in . Hence, . Let .

Then there are two linear relations

and a quadratic relation

We have the extended ring

with the ideal . This ring is normal. Indeed, after some algebra (in fact, by means of computer algebra), we can check that the singular locus of is an empty set.

(We check the emptiness by the computation of Gröbner basis, which should be generated by .) From this reason, for a radical ideal which contains the singular locus, we have (the ring itself). Hence and the criterion for the normality is satisfied.

As is integral over and actually in , the ideal is represented in two ways by the substitution for :

These two ideal lie in . As is trivial, we adopt for the purpose of normalization. In addition, when we introduce the variable , we implicitly assume that . When , the ideal is given by

Then the normalization of is given by two rings:

and

Compare this result to the example of primary ideal decomposition with the same ideal which we will compute in section 6. We observe that the normalization has done the decomposition of the ideal imperfectly.

Hensel’s lemma

Consider the problem to solve the equation

Let us substitute

in

:

Let

. Then

Hence, if

, then

Let

. Then

Hence, if

, then

Likewise, by setting , we can determine s such that . It means that we obtain the 3-adic solution of the equation

Let us consider the polynomial in . We have

Hence if ≢ or, equivalently, if ≢ , we obtain such that by the fraction.

Observe the similarity with Newton method in numerical analysis. In other words, the p-adic solution of as . This is Hensel’s lemma, stated as follows.

Theorem 4.3 (Hensel’s lemma) If and satisfies

and

≢

then there is a unique such that and .

In the lemma, there is a condition ≢ , while, from the above example, it seems that we should use ≢ for changeable . In fact, by the construction, , it follows that . Hence we assume that condition only for the starting point .

In commutative ring there is a related idea, called “Linear Hensel Lifting”, by the following theorem.

Let be a commutative ring. Let , , be univariate polynomials in and let be a ideal in . If decomposes into the product of and in , that is to say,

and if there exist polynomials s and t in such that

then, for every integer we have polynomials and such that

and

The computation of and is done in a constructive way.

Let and . And let

and

We solve the equation

so that we obtain and such that and .

Example 4.44 , and . For , we begin from

and we obtain in an iterative way. As usual, we simply write the roots of by .

Hensel’s lemma implies the existence of a power series in .

Real algebraic geometry

The computation of hydrogen molecule, presented in the introduction, is done in the real number, and in the algebraic set defined by the polynomials:

On the other hand, we can add extra constraint of the form

Hence it is important to study the existence of solutions of (A) with (B).

We review several results from real algebraic geometry from now on. (As for rigorous theory, see the book by Bochnak et al. [12] or the book by Lassere [13].)

These two statement are equivalent for an affine algebraic variety:

(i) the ideal

has real generators

.

(ii)

, (by complex conjugation)

Then we define real affine algebraic variety and real ideal.

Definition 4.13 The set of real points with is called a real affine algebraic variety.

Definition 4.14 An ideal is called as a real ideal, if it is generated by real generators and satisfies the following property:

For any real algebraic variety, is a real ideal. To see the difference between real and non-real ideals, consider , , and . The last two ideals are not real ideals.

Definition 4.15 Let polynomials. The set is called as a basic closed semi-algrbraic set. A general semi-algebraic set is the boolean combination of them.

Theorem 4.5 (Real NullStellensatz) Let us define the real radical as follows:

Then it holds that , where is the real affine algebraic set of . If is a real ideal, ; that is to say, for a real ideal , the radical ideal (by the standard definition of commutative algebra) is equal to the ideal of (the affine algebraic set of in ).

Example 4.45 For , .

Definition 4.16

From these definitions, are the sums of squares of polynomials; is the “quadratic module”, which is the set of polynomials generated by and ; M is the multiplicative monoid generated by .

Theorem 4.6 (Positivestellensatz) Let , , and be the polynomials in . The following properties are equivalent.

(i)

(ii) , , and such that . From this theorem, one can derive the following theorem, also.

Theorem 4.7 Let and be polynomials in and . Then the following statements hold.

(i) if and only if such that .

(ii) if and only if such that .

Example 4.46

The theorem asserts that

for some polynomials . (From the theorem, the set is empty if and only if , , , such that . Now we can choose .) In other words, every non-negative polynomial is a sum of squares of rational functions.

Example 4.47 Consider

.

Define

,

,

,

to be

When , these polynomials are well defined in . Then it happens that , where , ,and . In other words, the quadratic equation has no real root if and only if .

Example 4.48 Consider the secular equation (for a molecular orbital model of a simple diatomic molecule):

The problem is equivalent to

Add the constraint:

A Gröbner basis (with respect to the lexicographic order ) of the ideal is . After some algebra, reduces to with respect to . Hence we have obtained

for and . If , and it satisfies the condition of Positivstellensatz. Hence there is no real solution to the problem. (Or we say that the non-symmetric wave function such that is not the ground state of the molecule at eigenvalue .)

Noether normalization

Let be a ring extension. Remember how can be an element integral over .

Definition 4.17 An element

is integral over

if it satisfies a monic polynomial equation with coefficients

The equation is called an integral equation for s over . If every element is integral over , we say that is integral over . We also say that is an integral extension.

Definition 4.18 Let be an affine ring . Then there are elements with the following properties.

(i) are algebraically independent over .

(ii) is an integral ring extension.

If the elements y1,...,yd satisfy these two conditions, the inclusion is a Noether normalization for .

Example 4.49 Let , and let . The residuals are not integral over . But the change of the variables

makes the change in as follows:

We can prove the residuals ) are integral over . Indeed, after a hard algebra (by means of Gröbner basis theory and the variable elimination as we learn later), we affirm that the ideal contains following two polynomials

and

Example 4.50 There are several types of the variable change which conduct Noether normalization.

- with in the coefficient field , when is an infinite field.

- . We have to find an integer .

To do with the latter type is, in fact, the proof of the existence of Noether normalization. Let be a polynomial defining the ideal . By the change of variables, we obtain

If the monomial of the highest degree with respect to is originally given by

it becomes

Hence, after the change of the variables, the term of the highest degree with respect to is given by

If r is large enough, this term has the degree larger than any other monomials. Therefore is integral over . There is another way to define the dimension of the variety. Let be a proper ideal. Let . If is a Noether normalization, the dimension of is given by the number , namely,

Differential Galois theory

One of the important ideas concerning algebraic geometry, differential algebra, and quantum mechanics is the differential Galois theory.

Let us solve

We get .

Let us solve

We get . In the first case, we can get the solution in the range of elementary functions; on the other hand, in the second case, we have to do the integration.

Then there arises a question: under what circumstance one can express the solution of a differential equation using exponents, integration, and also by adding algebraic elements? We proceed step by step, by adding more elements which are constructed over the elements already presented in the computation. The analytic solution given by this way is called Liouvillian solution, although it is not always possible to construct it. The solvability condition is given by the differential Galois theory [14-16].

In the application of eigenvalue problem in quantum mechanics, we can consider the one-dimensional Schrödinger equation:

with the even-degree monic polynomial potential

In the last representation of , the polynomial is given by completing squares. Let us write the solution in the following form:

as the product of a monic polynomial and

Now we can establish the relation among the coefficients and in the polynomial potential, the eigenvalue and . The relation of this sort is given by a second-order differential equation for . As the consequence of differential Galois theory, the equation has solutions at the particular setting of , and . To solve the problem, one can utilize Gröbner basis theory and the variable elimination, which shall be explained later. If the equation is solvable, we can obtain the analytic solution [16].

Other important concepts of commutative algebra

It is advisable that the readers should consult textbooks and grasp the concepts of more advanced technical terms, such as “module”, “free module”, ”regular local rings”, “valuation”, “Artinian ring”, “affine algebraic variety”, or so. Maybe the concepts of homological algebra would be necessary, such as “functor”, “exact sequence”, “resolution”, “projective”, “injective”, “Betti-number”, and so on. Indeed, the research articles are frequented by these concepts.

Gröbner Basis Theory

The Gröbner basis theory is the key technique of commutative algebra and algebraic geometry[17-19]. The idea was first proposed by Bruno Buchberger, and the namesake is his advisor Wolfgang Gröbner.

The monomial orders

In one-variable case, we implicitly use the “monomial order” through the ordering of degrees: or . In the multivariate case, we encounter the monomials of the form xi yj zk. What should be the appropriate ordering of the monomials? In fact, there are several ways to set the order .

Let and be the monomials. And let a and b be sequence of superscripts and .

Definition 5.1 The lexicographic order.

This definition is equivalent to the following statement.

, if the left-most component of a―b is negative.

Definition 5.2 The reverse lexicographic order.

This definition is equivalent to the following statement.

, if the right-most component of a―b is positive.

Definition 5.3 The degree reverse lexicographic order:

Let

(1)

or

(2) and : . (The right-most component of a―b is positive. )

Example 5.1 Compute in . The monomials can be sorted by the different types of monomial order. (Here the degree of a monomial is given by this correspondence: .

The lexicographic order: ;

The reverse lexicographic order: z>y>x;

z3+3yz2+3x2+3z2+3y2 z+6xyz+6yz+3x2 z+6xz+3z+y3+3xy2+3y2+3x2 y+6xy+3y+x3+3x2+3x+1

The degree reverse lexicographic order: ;

The difference between the lexicographic order and the reverse lexicographic order is apparent; it is simply a reversal. The difference between lexicographical order and the degree reverse is subtle; it could be understandable by these phrases by Ene and Herzog [9],

...in the lexicographic order,

if and only if u has “more from the beginning” than v;

in the degree reverse lexicographic order,

if and only if u has “less from the end” than v...

Initial ideal

Let be a polynomial in the ring with the monomial order . The ”initial monomial” is the largest monomial included in (from which the coefficient is removed). The “initial coefficient” is the coefficient of .Hence the “leading term” is given by the product of the initial coefficient and the initial monomial: . We use an extra definition: .

The initial ideal is the monomial ideal, generated by the initial terms of the polynomials in ,

This ideal is a useful tool in the theory of Gröbner bases.

Definition of Gröbner basis

The Gröbner basis is defined now.

Definition 5.4 Let be the ideal in the polynomial ring , with the monomial order . The Gröbner basis is the sequence of elements , such that .

We could represent the polynomial by means of a sequence of polynomials in the following way (the standard expression)

such that

- (i) No monomial in the remainder r is contained in the ideal ).

- (ii)

If we say that f reduces to zero with respect to . In general case, the standard expression is not unique. From this reason, we have to use Gröbner basis, since the standard expression by Gröbner basis is unique and any polynomial reduces uniquely by this basis. For example, consider the case with , , and in the lexicographic order. There are two standard expression: and . However, the ideal is generated by the Gröbner basis , by which f reduces to zero and it has the unique standard expression.

Buchberger’s algorithm

The Buchberger’s algorithm is the standard way to generate Gröbner basis [3,6,7,9,20-22]

Let us define the S-polynomial of two polynomials and

where and are the leading coefficients of and .

One can prove that

are the Grb̈ner basis of ideal with respect to a monomial order ,

if and only if

reduces to zero with respect to for .

The computational step is as follows.

- Step–0 Let be the generating set of ideal .

- Step–1 For every pair of polynomials in the ideal , compute and its reminder with respect to .

- Step–2 If all reduce to zero with respect to , we have already obtained the Gröbner basis. If there are non-zero reminders , add to and repeat again at Step–1.

The computation terminates after finite steps.

Let us see examples.

Example 5.2 Consider and in , with respect to lexicographic order . In the beginning, . As and , . The leading monomial is . As the monomials in spoly(f,g) is not included by the monomial module , does not reduce to zero; indeed it is the reminder itself. We add to so that . Then we compute more of s-polynomials : and . Since and reduces to zero with respect to , we obtain the Gröbner basis . In fact, the initial term of is divisible that of , and , is a redundant term which we can remove from the basis safely.

Example 5.3 Consider , in , with respect to lexicographic orer . We have . We compute the s-polynomials: , , and . These three polynomials reduce to , f5=-2y2+1, and . We get and we compute the s-polynomials and the reminders. The only non-zero reminder is , to which and reduce. We have . We compute the s-polynomials and ascertain that all of them reduce to zero. Thus is the Gröbner basis. However, , , and are redundant: indeed they have the initial monomials divisible by those of other polynomials ( ) and reduce to zero with respect to . Thus we can chose as the Gröbner basis, and indeed this ideal satisfies the required property.

Example 5.4 Consider in . As , The Gröbner basis is . This Gröbner basis is never to be zero, and the set of equation does not have any solution. In other words, the affine algebraic set of this ideal is empty . Likewise, in the more complicated case, by computing the Gröbner bases G, we can check the existence of the solutions of the set of polynomial equations.

In the above algorithm the generated Gröbner basis is not properly “reduced” by the terminology of several contexts. A reduced Gröbner basis should have the following property [23]:

- The leading coefficient of each is 1.

- for all , the monomials in are not divisible by .

Gröbner basis of zero-dimensional ideal

The following statements are equivalent for a zero-dimensional ideal with a monomial order .

1. is a zero-dimensional ideal.

2. The affine algebraic set is finite.

3. If is a Gröbner basis, then, for any n, there exists such that for some .

4. is contained in finitely many maximal ideals of .

5. Let be the set of monomials in . The set is a finite set.

6. is a -vector space of finite dimension.

The statement 3) enables us to detect a zero-dimensional ideal from its Gröbner basis, if the latter contains polynomials which have initial terms such that for any .

The feature of zero-dimensional ideal, given by statement 5) and 6), will be useful for solving polynomial equations by Stickelberger’s theorem, as is explained in section 5.12.

Example 5.5 For , the Gröbner basis with respect to lexicographic monomial order is . This is the example of zero-dimensional ideals and it satisfies the statement 3).

Example 5.6 For , the Gröbner basis with respect to lexicographic monomial order is . As the ideal depicts the intersection of the unit sphere and a plane surface, it is not zero-dimensional. Although the Gröbner basis has the terms and , these terms are not initial terms. Hence this ideal does not satisfy the statement 6).

Example 5.7 For , the Gröbner basis with respect to lexicographic monomial order is . This is the example of zero-dimensional ideals and it satisfies the statement 3).

Syzygy

For a Gröbner basis , there are relations as follows

by means of the set of polynomials . (A trivial example is .) Such a set of polynomials is a “module” (which is actually a vector space), and we call it the first Syzygy and denote it by . The basis set of this module is computed from the Gröbner basis.

When the computation of Gröbner basis is completed, there are relations among the generators of the basis of the form:

Let us define

Then generates . Moreover,even if is not a Gröbner basis, we can compute through its Gröbner basis.

Example 5.8 Let us compute the syzygy of with the lexicographic monomial order . are generated by three trivial generators , and by the non-trivial one, .

Observe that the inner product of those generators and is zero. The generators form a vector space.

Sygyzy is a module, in other words, a kind of vector space generated by the vectors, the entries of which are polynomials. We can compute the second syzygy in the vector generators of the first syzygy and, likewise, the higher syzygy, too. The successive computation of higher syzygy enables us to construct the “resolution” of a module M in a Noetherian ring; the computation terminates after a certain step so that we do not find any non-trivial sygyzy in the last step [23].

Church-Rosser property

The reduction is a binary relation from one object to another, like an arrow going in one direction. We often call it the rewriting process.

Definition 5.5 A binary relation (denoted by the symbol ) has the Church-Rosser property, if, whenever P and Q are connected by a path of arrows, P and Q have a common destination R by the relation.

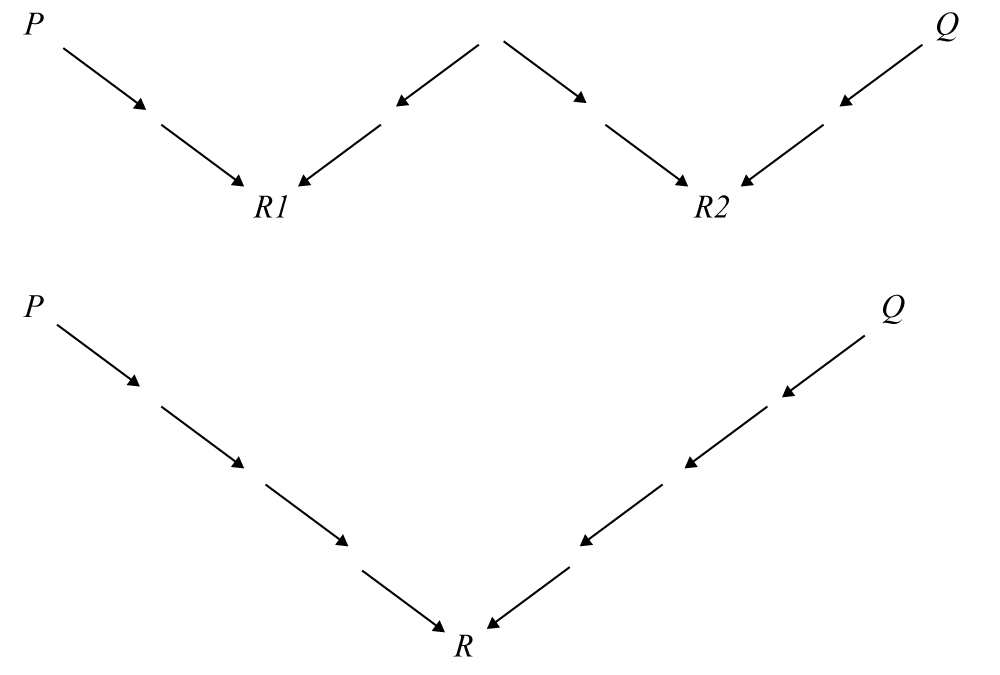

Hence we can define Gröbner bases in the other way: A basis is a Gröbner basis if and only if the reduction with respect to the bases has the Church-Rosser property, as is illustrated in Figure 3.

Figure 3: Two types of reductions by binary relations. (Upper) not Church- Rosser type; (Lower) Church-Rosser type. If the basis of an ideal is not Gröbner, the reduction goes in several destinations as in the upper figure. On the other hand, if the basis is Gröbner, the reduction is unique.

Complexity of the Gröbner basis algorithm

The original algorithm by Buchberger, as is presented here, is not so efficient. It produces a lot of useless pairs of polynomials , since such pairs give birth to s-polynomials , which immediately reduce to zero or produce redundant polynomials. There are a lot of studies about the upper bound of the complexity of Gröbner bases, such as Hermann bound [24], Dube bound [25], Wiesinger’s theorem [26], and so on. Those bounds are determined by several factors: (1) the number of variables, (2) the number of polynomials in the ideal, (3) the number of possible s-polynomials, (4) the maximum degrees of the polynomials, etc. In the worst case, the complexity is doubly exponential in the number of variables [25,27-30]. However, the computation of the Gröbner basis is equivalent to the Gaussian elimination process of a large matrix (by the row reduction of Macauley matrix) [17,29,31,32]. For the case of homogeneous polynomial ideals, the complexity in the Gaussian elimination method is bounded by

where is a constant, m is the number of polynomials [33], is the dimension of the ring, and is the maximum degree of the polynomials [33]. In order to improve efficiency, one can employ more refined methods, such as Faugère’s and . Indeed the efficiency of [34, 35] outperforms the row reduction computation of Macaulay matrix [33].

In fact, the efficiency of the algorithm is highly dependent on the chosen monomial order. The lexicographic order is convenient for theoretical study, but it consumes a considerable quantity of computational resource. Thus one often has to compute the Gröbner basis by other monomial orders (say, the degree reversible lexicographic order) in order to facilitate the computation, and then, one can remake the computed result in lexicographic order, by means of FGLM (Faugère, Gianni, Lazard, Mora) algorithm [36].

There is another problem in the Gröbner bases generation. which is apparent in practice. The Buchberger’s algorithm applies the operation of addition, subtraction, multiplication, and division to the polynomial system. It often causes a great discrepancy in the degrees of the generated polynomials and the numerical scale of coefficients in the final result. In the computation presented in the introduction of this article, one can observe such a tendency. Thus, for the practical purpose, one must utilize several tricks to keep the polynomials as “slim” as one can [37-39].

Gröbner basis for modules

Let us review what is the module. A module M is an abelian group with scalar multiplication , such as . It has the following property.

A free module is the module with a basis set . We can compute Gröbner bases of free modules. One of the purpose of this sort of computations is to study the linear equation of polynomials:

for which one might ask for the linear dependence of rows.

We define the monomial order in modules in these ways.

- Position over coefficient :

if

or

and

.

(Example: ). - Coefficient over position : if or and . (Example: ).

In the similar way as in the case of polynomial, we chose the leading terms of the elements in a module; we also compute the s-polynomials and the reminders in order that we obtain the Gröbner basis. The slight difference is that when and we use the s-polynomial as follows:

(Here we use the notation to represent the coefficient of .)

Application of Gröbner basis

Gröbner basis is a convenient tool to actually compute various mathematical objects in the commutative algebra.

Example 5.9 Elimination of variables: The intersection of ideal and the subring is computable. Let and let be a Gröbner basis of . Then is the Gröbner basis of . If we compute the Gröbner basis by means of lexicographic orders , we obtain the set of polynomials,

,

,

...

,

....

.

Thus we can easily get .

Example 5.10 Intersection of ideal and in . Let y be an extra variable. is computable by the relation . The intersection of the right-hand side is computed by the elimination of variable .

Example 5.11 The ideal quotient I:J is computable. If , then . In addition, for a single polynomial , . We can compute and obtain the generators . Then is generated by . Hence is computed as the intersection of .

Example 5.12 Saturation: it is defined for an ideal and a polynomial in a ring as follows.

Let be a new variable, and let be the ideal generated in by and by the polynomial . Then the saturation . [compsat]

Example 5.13 Radical membership: for some For all , there exists such that . . Hence , if the ideal, defined in example 5.12, has the Gröbner basis , then .

Gröbner basis algorithm in a different light

The Buchberger’s algorithm is actually the elimination in large matrices. It is the way of Faguère to do the row reduction in the large matrix. Let us see how it would work.

Consider the same problem: , , . We compute the s-polynomials and . The coefficients in these polynomials are given in the matrix.

The matrix in the right hand side is the so-called Macaulay matrix of the second order. The row reduction yields:

We obtain and and now we have the temporary Gröber basis ; and can be excluded in the consideration, because they are “top-reducible” by and easily recovered by the present .

Let us compose the third order Macaulay matrix:

The row reduction yields this:

We obtain . Now

We again compute the third order Macaulay matrix

The row reduction yields:

We obtain . Now .

We compute the fourth order Macaulay matrix:

We do the row reduction in the fourth order Macaulay matrix:

We obtain . Now . However, as yields , and as , would be . The s-polynomials for are computed now:

,

.

As those s-polynomials reduce to zero with respect to , it is proved that is a Gröbner basis.

In the above example, we process the computation carefully so that the unnecessary polynomials are removed immediately as soon as they appear; we also limit the computation in the Macaulay matrices which would be as small as possible, although these matrices might be embedded into a very large one. Indeed we have done the row reduction numerically, not symbolically, in a very large, but sparse matrix. The algorithms and by Faugère adopt these policies in a systematical way so that these algorithms are of the most efficient methods to generate Gröbner bases.

Stickelberger’s theorem

In [2], an alternative of the numerical solution, instead of Gröbner basis, is also used. This method is based on Stickelberger’s theorem. In general, for a zero-dimensional ideal , is a -vector space of finite dimension; that is to say, the vector space is spanned by the set of monomials and the result of the multiplication between two monomial is also represented by the linear combination of the monomial basis. Therefore, according to the assertion of the theorem, the operation of a monomial to the monomial basis is thought to be the operation of a matrix in . And the eigenvalue of the matrix gives the numeral value of the corresponding monomial at .

Consider . As a Gröbner basis of is , the monomial basis in is given by , from which is dropped. The transformation by and are given as follows.

The transformation matrices (by ) and (by ) are those in the right hand side in the both equations. It is easily checked that there are four eigenvectors, common both for and , which lie in :

Now we have obtained the numeral values of and at from and . In fact, the eigenvalues and also give us the value of and at . As for the value of , we easily compute it from the polynomial relation by the ideal .

Algorithm of computing Krull dimension

As we have seen, the zero-dimensional ideal is detected immediately after the computation of its Gröbner ideal. However, it takes a little of algebra to compute the non-zero dimension of an ideal [40].

- Let be an ideal. Then the Krull dimension of is given by an integer , i.e., , such that d is the maximal cardinality of a subset of variables with . (A maximal independent set of variables with respect to is a subset of variables of maximal cardinality, defined as this.)

- Let be any monomial ordering on . And let be an ideal. Then the Krull dimension of the quotient ideal is computed by means of the initial ideal of :

Hence we only have to consider the initial ideal , instead of the ideal itself.

- Let

be an ideal with monomial generators

. Let denote

by

. Define

in a recursive way

, and

. - Then .

Example 5.14 Consider .

, as . Since , .

Example 5.15 Consider . Then we have to consider and the conclusion is the same as the previous example.

Example 5.16 Consider with lexicographic order . The Gröbner basis is . Hence . Since is the set of variable of maximal cardinality with we have . (We can arrive at the same conclusion by means of the recursive function .)

Algorithm for the decomposition of the ideal as eigenvalue solver

In the example of the hydrogen molecule in section 3, we have seen that the polynomial secular equation is made into the triangular form, in which the relation of variables is clarified, almost to represent the roots themselves. The mathematical foundation of such computations is the primary ideal decomposition.

Primary ideal decomposition

There are two special sorts of ideal: prime ideal and primary ideal, as we have seen in the previous section. One can comprehend these sorts of ideal with the analogy of elementary number theory. An integer is decomposed as by the prime factorization. The ideal of the integer n is (the integer multiple of ); and it is represented by as the intersection of the ideals generated by certain powers of primes.

An ideal in the commutative algebra, likewise, could be decomposed as the intersection of primary ideals [3]:

One of the most elementary procedure for primary ideal decomposition is summarized as follows [41]. Let be the ideal in a Noetherian ring , and are elements such that , and .

1. Find such that .

2. Let and .

3. The ideal and are larger than . The decomposition process must be done for each of them. By choosing some proper , we can decompose and , hence by primary ideals.

Example 6.1 Consider in with the lexicographic order .

- is not in the radical ; and . Thus we set and

- Let . This is a primary (in fact, a prime) ideal, and (stabilized).

- Let : this is because of another representation . The ideal can be decomposed again.

- • Now take , .

- . This ideal is primary (in fact, prime).

- and (stabilized). This ideal is primary (in fact, prime).

Now we have done the primary ideal decomposition: .

We should notice that the ideal is the secular equation of the diatomic system,

The primary ideal decomposition is the operation equivalent to the eigenstate computation by means of linear algebra: the solutions of the secular equation are given by the affine algebraic sets of the computed ideals. We obtain the eigenvalue and for which the eigenvectors are and .

In fact, the above example is one of the simplest cases which we can compute manually. The general algorithm for ideal decomposition is first proposed by Eisenbud [42]; and practical algorithms are developed by Gianni, Trager and Zacharias [43], by Shimoyama and Yokoyama [44], by Möller [45] and by Lazard . (As for the semi-algebraic set, one can do similar decomposition, as is studied by Chen and Davenport [46].) The comparison of algorithms is given in [10].

Algorithm of Gianni, Trager, and Zacharias

In this section, we review the primary ideal decomposition algorithm of Gianni, Trager, and Zacharias (GTZ algorithm) [18, 40]. In the algorithm which we have seen in the previous section, we have to search a “key polynomial” by trial and error to decompose an ideal. In contrast, GTZ algorithm enables us to find such a key in a more rational way.

Definition 6.1

- A maximal ideal is called in general position with respect to the lexicographical ordering with , if there exist such that .

- When an ideal is decomposed by primary ideal decomposition , the prime ideals are called associated primes of . is a minimal associated prime of if for all .

- A zero-dimensional ideal is called in general position with respect to the monomial order with ), if all associated primes are in general position and for .

Theorem 6.1 (Zero-dimensional Decomposition) Let be a zero-dimensional ideal. Let , with for . Then the decomposition of ideal is given by .

If I is in general position with respect to the monomial order with , then are primary ideals for all .

Theorem 6.2 (Ideal decomposition in general case) Let . Let be an ideal, and let be a maximal independent set of variables for . Then these statements hold.

(i) The ideal ] generated by in is zero-dimensional. We denote the field of rational functions on with variables u by .

(ii) Let be a Gröbner basis of . Let . Then . If , then .

(iii) If = is an irredundant primary decomposition, then is also an irredundant primary decomposition.

Example 6.2 Consider in with lexicographic order . We know a Gröbner basis of I is .

- Choose as a maximal independent set of variables.

- . This ideal is a Gröbner basis in .

- Then .

- . And . We have a decomposition .

- We decompose . As is zero-dimensional in , we apply the algorithm in zero-dimensional case. Since , the decomposition of is given by . As the ideal is in general position with respect to lexicographic order in , and are primary ideals. Notice that we are working in . However, as the decomposition of inherits that of , we have obtained the required decomposition.

Triangulation of polynomial system

In case of the systems of polynomial equations which have only finitely many solutions (i.e. to be zero-dimensional), several methods methods are proposed [45,47] which decompose the solution set into finitely many subset of the triangular form with respect to the variables entries,

This kind of algorithm is used in the application of algebraic geometry in molecular orbital theory as is demonstrated in section 3, instead of conventional linear algebra.

Let us review the triangular decomposition algorithm by Möller [45]. The algorithm is based upon several lemmas.

Lemma 6.1 (Lemma 2 in [45]) Let A be an ideal in a ring such that Krull dimension of the residue class ring is zero : . If is an ideal such that and if is sufficiently large, the affine algebraic set is the disjoint union: with

.

(The definition of of this theorem, given in [45], is slightly different from the conventional one. In this section only, we use this definition.)

Lemma 6.2 (Lemma 3 in [45]) Let A is a ideal in a ring such that Krull-dimension of equals to zero and let be another ideal in such that . Then, for sufficiently large

with . In addition, if A is a radical ( ), the above relation holds for all positive .

Lemma 6.3 (Lemma 5 iii) in [45]). Let with nonzero polynomials , .

If is a Gröbner basis with respect to a monomial order <, then is a a Gröbner basis with respect to .