Journal Name: Journal of Multidisciplinary Research and Reviews

Article Type: Research

Received date: 03 March, 2020

Accepted date: 24 August, 2020

Published date: 31 August, 2020

Citation: Kuoch SK, Nowakowski C, Hottelart K, Reilhac P, Escrieut P (2020) Design Principles behind the Valeo Mymobius Concept: Creating an Intuitive Driving Experience for the Digital World. J Multidis Res Rev Vol: 2, Issu: 2 (01-10).

Copyright: © 2020 Kuoch SK et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Abstract

As we approach the 135th anniversary of the automobile, two industry trends, automation and digitalization, are rapidly revolutionizing the thus far relatively unchanged automotive user experience. This paper describes a case study based on the development of the Valeo MyMobius user interface concept. The goal of this project was to explore how to achieve an intuitive driving experience as the automotive industry undergoes a transition from primarily analog to primarily digital interfaces and from physical buttons to multimodal interactions. To achieve the perception of intuitiveness, designers must understand their users, find and reduce physical and cognitive friction points, and bridge knowledge gaps with interface designs that facilitate discovery and learnability. The Valeo MyMobius concept featured steering wheel touch displays that supported quick, frequent menu selections using swiping gestures (common in smartphone interactions) and reinforcing icons (to facilitate learnability). Learning algorithms personalized the experience by tailoring suggestions, while more complex interactions were handled with a conversational voice assistant, which also served as a driving copilot, capable of contextually suggesting when Advanced Driving Assistance System (ADAS) features such as ACC could be utilized. The visual design aesthetic embodied Kenya Hara’s design philosophy of “Emptiness,” reducing visual clutter and creating spaces that are ready to receive inspiration and information. Altogether, the Valeo MyMobius concept demonstrated an attainable future where the perception of intuitiveness can be achieved with today’s technologies.

Keywords

User experience, UX, User interface, User interaction, Automotive cockpit design, Intuitive driving, Driving automation, Digitalization, Personalization, Valeo Mobius, Valeo MyMobius.

Introduction

Automotive user interfaces

The automotive user experience remained relatively unchanged during the first 100 years of the automobile. The familiar steering wheel, gear shift, and gas and brake pedals controlled the vehicle motion, and various shapes and sizes of mechanical, and later electro-mechanical, controls dominated the in-vehicle user interface. Even into the 1990s, the in-vehicle user experience was primarily focused on the driving task, and the user interface was limited to displaying vehicle status and providing limited audio entertainment [1]. However, as we approach the 135th anniversary of Karl Friedrich Benz’s first automobile, the automotive industry finds itself on the precipice of change. Two industry trends, automation and digitalization, are rapidly revolutionizing the driving experience as we know it.

While forecasts may differ widely on when driving automation will be ready and how fast it will permeate the market, there is a strong case for a future that includes a mix of vehicles with various levels of driver assistance and situation-limited automation that will still require manual driving under certain conditions [2]. However, as vehicles become more highly automated, the driver will find him- or herself as a part-time driver and part-time passenger, or as we’ve previously coined, a drivenger [3]. As this shift happens, one thing is clear: the user experience will need to evolve, because drivers will not be content to hold the steering wheel and stare at the road waiting for a request to take over driving. This realization was the inspiration behind the initial Valeo Mobius® concept [2], and it still applies to the Valeo MyMobius concept described in this paper.

The second industry trend, digitalization, has been used to refer to everything from internet-based vehicle sales, marketing, and customer relationship strategies to the increasing adoption of digital technologies in the vehicle, such as touch screens, Wi-Fi, and cellular connectivity. More recently, it has also been used to refer to connected supply chains, driving automation, and Mobility as a Service (MaaS) [4]. As of 2017, the worldwide market for automotive touch screens reached 50 million units and was forecast to continue to grow at a rate of 10 percent per year [5]. These trends are not surprising. New in-vehicle features and functionality have always mirrored the evolution of consumer technologies found outside the vehicle [1]. Automotive radio was introduced in 1922 [1], mainstream car phone service was introduced in 1982 [1], and even the first in-vehicle CRT (cathode-ray tube) touchscreen dates back to the 1986 Buick Riviera [6]. In-vehicle entertainment systems have progressed from radio, to cassette, to CDs, to digital media players. Navigation systems date back to 1994 in Europe on the BMW 7 Series [7] and 1995 in the U.S. with the Oldsmobile GuideStar system [8].

However, the user experience surrounding the digitalization trend is more than simply replacing one audio format with another or replacing paper maps with electronic versions. The shift to digital entertainment has had profound impacts on the user experience. Radio allowed us to listen to audio entertainment, but we could only listen to what was being broadcast during the time that we were in the car. Cassettes, CDs, and digital media not only gave us more listening options, but they allowed us to time shift our listening. The programs we want to listen to are available when we are available to hear them, and now, with added connectivity, practically every audio program ever created is available at any time of our choosing! The user experience has radically changed, but with it comes the user interface design challenge of how to intuitively enable such broad functionality.

Similarly, the shift from paper maps to navigation systems also fundamentally changed the automotive user experience. Prior to the shift, trips to new destinations required pre-trip planning, visually calculating the optimal route, and reducing it to a list of street names and turn directions. Now, all of that work is essentially automated by navigation systems. How to get where we are going, what’s the best route to avoid traffic, and even when to leave in order to reach our destination on time are all concerns of the past.

Valeo MyMobius concept: A case study

In prior research, Valeo conducted an investigation to find out what users might perceive as “Intuitive Driving”, especially in the context of increasing driving automation [9,10]. We asked participants what intuitive meant to them, showed them some user interface concepts, and worked to understand how to go about creating an intuitive user experience. From an automotive user’s perspective, “intuitive” means “natural,” and “natural” means acting without thinking. Users want to be able to interact with an automotive user interface quickly, easily, and with the least amount of effort possible. Their reactions to the Valeo Mobius® concept were positive, and further testing of the concept showed that the design enabled a fluid, continuous exchange of agency between human and vehicle, allowing drivers to maintain situational awareness and minimizing response times when a transition of control from automated to manual driving was required [11].

Building on the Valeo Mobius® research, this paper focuses on a case study of how the concept was extended to meet user needs as automotive interfaces transition into the new digital world. Although some example user journey maps may be described, the goal of this paper was not to detail the entire automotive infotainment user journey map or create a comprehensive hierarchical task analysis. The goal of this study was to review what philosophies, principles, and trends most influenced the design decisions behind the Valeo MyMobius concept. For the purposes of this study, the term “user” simply refers to typical and capable automotive drivers.

User Experience Design Philosophy, Principles, and Trends

MyMobius concept philosophy

The basic philosophy behind the Valeo Mobius® concept was to develop an intuitive automotive user experience in a market trending towards increasing vehicle automation. The rise of the digitalization trend saw the addition of the word “My” to make the concept into MyMobius, representing an evolution in our design thinking to reflect a new emphasis on personalization as a future element of intuitiveness. In developing the Valeo MyMobius concept, we set out to rethink the focus on current touch interactions and explore multimodal, digital interface possibilities. Technology is no longer the limiting factor in design; rather, we have reached a point where it is the imagination of the designers that limits the potential experiences.

As part of the automation and digitalization trends, there has been an explosion in the number of driving assistance features and infotainment system functions, resulting in a corresponding increase in the number of buttons, controls, menu items, and vehicle real estate devoted to these tasks. There are simply more features, options, and parameters than can be manipulated with dedicated controls. Multifunction displays have allowed us to consolidate space, trading a breadth of switches for depth in menu structures. Instead of having many single-function controls, users must now navigate the complex, deep menu structures made possible through digital user interfaces. It doesn’t take long to find articles heralding that modern cars have become too complicated, and even downright annoying [12,13]. The early results of the automotive digitalization trend were frustration, the polar opposite of intuitiveness, but what we hope to show in this paper is that designers already have the tools to design intuitive digital interfaces by supporting both learnability and memorability, two key usability metrics affecting the perception of intuitiveness.

What is an intuitive design?

In the article “What Makes a Design Seem ‘Intuitive’?” [14], Jared M. Spool explains that an interface can’t, by its very nature, be intuitive. User interfaces are pre-programmed. They cannot intuit anything; they simply execute. People, on the other hand, intuit, deduce, and understand. When people perceive an interface as intuitive, they consider the interface to have helped them more easily intuit.

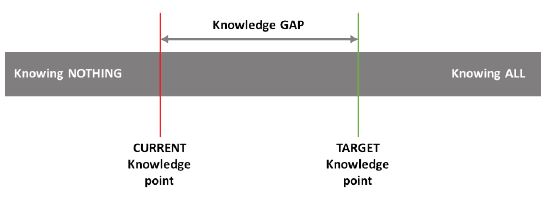

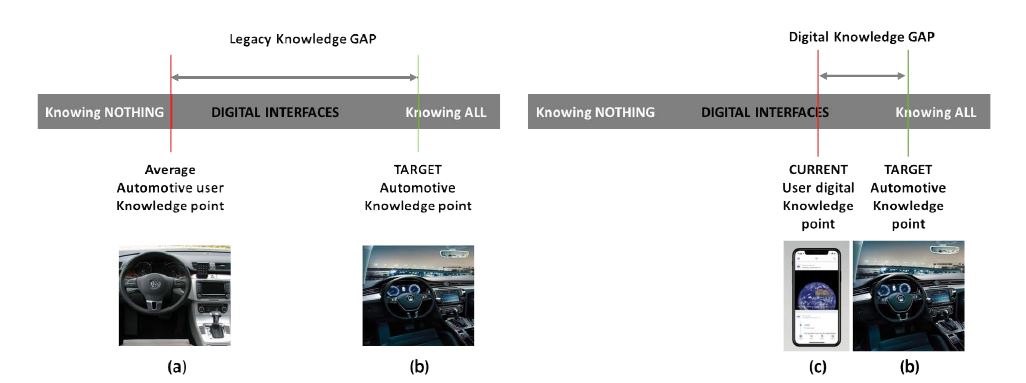

Thus, to be able to design an intuitive experience, we must first know the user and understand their perceived affordances, prior experience, and preconceived mental models, which allow us to predict how they might try to interact with a given interface. Essentially, we must understand the users’ knowledge space. Jared M. Spool considered knowledge space as a single-axis continuum (Figure 1), starting from knowing nothing and ending at perfect knowledge about the interface [14]. If current knowledge represents the knowledge the user has when they first approach an interface and target knowledge is the knowledge the user needs to achieve their desired task, then it is possible that the user’s current knowledge falls short of the target knowledge, leaving a knowledge gap.

Wherever there is a potential knowledge gap, there is also an opportunity for design to shine. In these situations, the design of the user interface must provide the bridge across the knowledge gap. An intuitive design can both guide the user across the knowledge gap and increase the user’s knowledge, consequently reducing future knowledge gaps. Conversely, a design that fails to bridge the knowledge gap results in an unintuitive experience for the user. The ultimate goal of user-centered design is to understand the user, predict potential knowledge gaps, and design user interfaces capable of bridging those knowledge gaps.

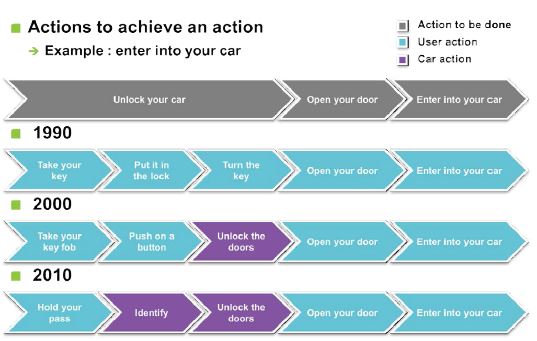

Designing away physical and cognitive friction points

One design strategy which has been used successfully in our past internal research has been to identify physical and cognitive friction points (sometimes referred to as pain points) in the user experience using methods such as surveys, interviews, focus groups, and naturalistic observation. Once friction points are identified, designers work to reduce these friction points, hopefully bringing the resulting user experience one step closer to intuitive. One example of this strategy can be illustrated by the user journey map evolution of vehicle entry (Figure 2). The seemingly simple act of entering the vehicle actually requires multiple steps leading to many potential friction points in the experience.

Through the 1990s, vehicle entry involved physical, mechanical keys. The first step to vehicle entry was unlocking the door. This step involved understanding that the car required a key and knowing which key opened the doors, as many cars had two keys, one for the doors and one to start the vehicle. Next, the user needed to figure out where to insert the key and which direction to turn the key once inserted. Some friction points, such as the direction to turn the key, were helped with standardization across vehicles, but many other friction points could be easily identified with a little observation. For example, drivers often approached their car with their hands full, and a physical friction point could plainly be seen as they attempted to dig the key out of their pocket while juggling bags or packages.

As we entered the 2000s, remote keyless entry systems were introduced. Now, unlocking your car could be accomplished from a distance by pressing a button on the key or key fob. This reduced some of the physical and cognitive friction points previously associated with vehicle entry. Now there was only one key, and less physical effort was required to unlock the vehicle. The driver could unlock the vehicle even with their hands full, as long as they had the forethought to pull the key out first.

Enter the modern proximity keyless entry systems. The current state-of-the-art keyless entry system no longer requires you to pull your key out of your pocket. As long as the key is on the driver, and the driver is within a certain proximity of the vehicle, the car will automatically unlock when the driver pulls on the door handle. Viewed without knowledge of the underlying technology, it is now almost as if the car intuitively knows who is allowed to enter!

Figure 1: The knowledge space continuum, showing current knowledge, target knowledge, and the knowledge gap between them [14].

Figure 2: The evolution of user experience with vehicle entry systems.

We can see that through the evolution of the technology and design, the user experience can be made to seem more intuitive as the physical and cognitive friction points are reduced, and future systems, such as the Valeo InBlue® virtual key, may eliminate the friction point of needing to even carry a key. With the InBlue® system, there’s no need to transfer a physical key to grant access to your car; the key can simply be loaned digitally and securely through a smartphone or smartwatch application.

Automotive users’ current knowledge point

The automotive industry has been in existence for over a century, and as described earlier, the technologies of the day have always influenced and even driven the evolution of the in-vehicle user experience [1]. The current industry automation trend has been enabled by sensor and computing technologies first deployed for Advanced Driving Assistance Systems (ADAS), and the digitalization trend has been enabled by LCDs, touch screens and surfaces, and nearly ubiquitous connectivity. However, the automotive industry moves slowly, especially when it comes to new design trends. To mass produce the modern marvel that is today’s automobile, vehicle design must start anywhere from 2 to 5 years before the first car rolls off the assembly line.

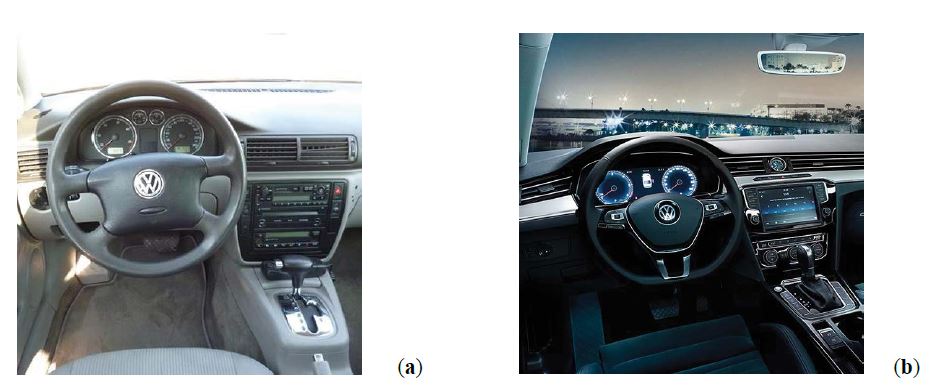

What we’ve seen is that even as the vehicle cockpit goes digital, the digital designs tend to maintain legacy appearances, such as simply replacing a physical button with a digital button on the touchscreen. Digital versions of legacy controls and interfaces are one strategy that can be used to help previous users transition from an analog world to the digital one. Users who have been driving a particular brand of car for years will have anchored their expectations of where to find certain controls and how those specific controls should function, especially given that physical push buttons, rotating knobs, and gauges with needles have all but defined the modern automotive cockpit. Novelty, on the other hand, can lead to a knowledge gap and actually hurt usability and intuitiveness.

Figure 3: The VW Passat user interface from 2000 compared with the 2016 user interface.

Although digital interfaces open almost infinite visual interface possibilities, a comparison of the VW Passat user interface from 2000 with the newer 2016 user interface (Figure 3) shows that the cockpit layout remains entirely recognizable. Although the 2016 model is equipped with several full-color LCD and touchscreen displays, the new digital interface is evolutionary rather than revolutionary. In fact, the 2016 VW Passat digital instrument cluster essentially mimics the analog needle and gauge interface found in the 2000 model. This technique of displaying a digital version of a real object is called skeuomorphism, and it has been used to engage and transition users from a physical world into the digital world since the advent of computers.

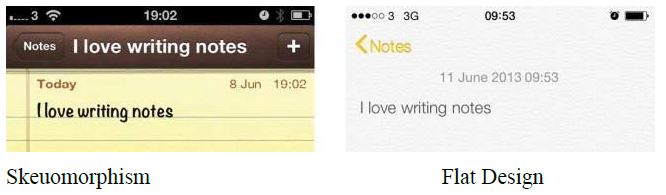

Skeuomorphism as a digital transition strategy

Skeuomorphism was widely used in the smartphone industry after the introduction of the first iPhone in 2007. The world transitioned from small devices with physical buttons and small, low-resolution displays to a high-resolution, full capacitive touch display and few physical buttons. One famous example of skeuomorphism is the Notes application on iOS, which mimics the well-known yellow ruled legal pad, complete with the suggestion that pages have already been torn off the pad (see Figure 4). Even the font was designed to look handwritten, further adding to the illusion that the digital interaction identically mirrored the physical object upon which the metaphor was based.

The skeuomorphism technique dominated both Apple iOS products and the smartphone market in general for roughly six years, from 2007 to 2013, before the designers at Apple made a widely controversial switch to flat design in iOS 7. The flat design was heralded as fully embracing the shift from the physical world to the digital world, putting the focus on the content instead of on increasingly realistic representations of the physical world. The difference between skeuomorphism and flat design can be seen in Figure 4. In addition to revolutions in visual design, the smartphone has pioneered new models of interaction with the digital world. Direct manipulation and multi-touch gestures have become intuitive anchors for digital users, as these kinds of gestures now feel as natural as moving real objects in the real world.

Figure 4: Apple Notes app in iOS 1 (left) and iOS 7 (right). Credit: https://drfone.wondershare.com/fr/notes/share-notes-iphone.html

Figure 5: Legacy automotive user vs. digital user knowledge gaps.

Emergence of gesture-based interaction

A 2010 study on touchscreen gestures from roughly 340 participants across 9 countries [15] found that desired gestures tended to be similar across both culture and user experience level. There were surprisingly few cultural differences found in the study. Furthermore, as hypothesized by Nick Babich [16], “One of the main reasons gesture controls feel so natural and intuitive to us is because they resemble interacting with a real object...The rise of touch and gesture-driven devices dramatically changes the way we think about interaction. Animation paired with gesture-control essentially tricks the brain into thinking that it’s interacting with something tangible. So we become that much more immersed in the experience.” In his opinion, gestures offer:

• Less clutter

• More delight

• More potential

Apps that rely on gesture control result in designs that are less cluttered, since digital representations of physical buttons can be removed from the screen real estate. Similar to the benefits of removing skeuomorphism, this allows the user interface to be more content-focused. Delight, once a gesture is learned, comes from reducing the number of steps required to complete a task, and the increased potential comes from allowing users more freedom and greater efficiency than could be achieved with more traditional, rigid button and menu structures. One example of the potential of gesture-based interfaces was demonstrated in the work of Matthaeus Krenn [17]. In his work, location-agnostic gestures showed great promise in an automotive setting because they eliminate the issues associated with making precise movements to fixed button locations in an environment prone to vibration and bumps.

A new hypothesis for digital users’ current knowledge point

The transition path towards digital interfaces in the smartphone industry foreshadows a similar path already underway in the automotive industry. Fully digital instrument clusters and infotainment systems are already pervasive in even moderately priced cars such as the 2016 VW Passat pictured in Figure 3. Although automotive user interfaces have been slow to make the leap from legacy analog to fully digital, the transition is not only occurring, but also accelerating. As this transition happens, the danger is that the benchmark for the average automotive user’s current knowledge will also transition, but at a different rate.

As designers, we might postulate that a knowledge gap exists between the legacy automotive user knowledge and the target knowledge of the fully digital automotive user interface as depicted in Figure 5 when comparing (a) and (b). Under this hypothesis, it might seem reasonable to conclude that the transition to digital interfaces should proceed slowly and cautiously, relying on teaching mechanisms, such as skeuomorphism, to lead the users into the new automotive digital age.

Alternatively, one could also postulate that when presented with a digital automotive interface, current user knowledge will be based on a user’s experience with smartphones, rather than their previous experience with automotive interfaces. In this case, the knowledge gap between smartphone experience and new digital automotive user interfaces would be considerably smaller, as depicted in Figure 5 when comparing (c) to (b). This alternate hypothesis would suggest that automotive designers could move more quickly and be bolder. But is there any disadvantage to moving slowly?

Although it may seem safe to always assume that user knowledge will be based on previous automotive experience, this strategy can actually result in more friction in the user experience. If current user knowledge is based on smartphone experience, and designers move slowly, holding onto the digital equivalent of analog interfaces, then the knowledge gap will in reality be much larger than anticipated. Smartphone users will experience frustration and cognitive friction as they try to interact with the automotive digital interface as if it were a smartphone, and the interface does not respond as expected. As an example, pinch-to-zoom is one of the most ubiquitous smartphone gestures related to mapping applications, yet even in 2018, very few automotive infotainment systems have implemented this gesture. The resulting need to find an alternate means to activate the feature can leave users feeling frustrated and regarding the user interface as hard to use, obsolete, and far from intuitive.

Valeo MyMobius Concept

Touch interaction

Figure 6: Picture of the steering wheel.

The Valeo MyMobius design was rooted in the assumption that as we enter the age of the fully digital car, the benchmark for user knowledge will shift to their experience with smartphones, rather than their past experience with automotive interfaces. As with smartphone user experience, touch interaction will continue to play a critical role in automotive user experience. However, one of the great challenges in automotive user interface design is that the user interface must support two types of tasks: the primary tasks of driving and secondary tasks when interacting with any of the other features available in the vehicle. Furthermore, until we reach fully driverless vehicles (without vehicle controls), the cockpit will be constrained by the vehicle controls. For example, the steering wheel will likely continue to serve as a resting place for the driver’s hands, and the location of the steering wheel will hinder direct manipulation of the instrument cluster. Furthermore, the motions of the steering wheel and the requirements for safety systems such as airbags will constrain the design of any steering wheel controls. The Valeo Mobius® intuitive driving concept tackled this challenge by replacing the traditional mechanical steering wheel switches with reconfigurable touch displays affording swiping gestures as shown in Figure 6.

The Valeo Mobius® steering wheel touch displays were developed using a user-centered design process, including early involvement of end users in all stages of the innovation and design process. New concepts and prototypes were constantly created and evaluated in terms of usability and acceptance using a variety of methods from focus groups to driving simulator studies. The overall concept was perceived as very appealing and attractive, and the steering wheel touch displays were rated as both intuitive and innovative [9].

Some improvements from user testing that were incorporated into the Valeo MyMobius concept include using swiping touch gestures to navigate while adding a physical push to validate the selection. Feedback showed that users tended to find the haptic feedback of a physical push to be reassuring. The physical button push also eliminates false selections when the user intended a swiping gesture or when the user brushed against the touch displays while using the steering wheel to control the vehicle. The MyMobius implementation mounted the touch displays within pivoting buttons, so pushing the entire display or the frame around the display triggered the selection and validation of the current command. (The same effect could also have been obtained with pressure-sensitive touch displays and haptic feedback.)
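The swipe-to-navigate, push-to-validate behavior described above can be illustrated with a minimal sketch. It is not the MyMobius implementation: the class name `SteeringWheelSelector`, its methods, and the menu items are hypothetical and chosen only to show that a swipe moves the highlight while only a physical push triggers a command.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SteeringWheelSelector:
    """Hypothetical model of one steering wheel touch display.

    Swipes only move the highlight; a physical push is required to
    validate, so an accidental brush never triggers a command.
    """
    items: List[str]
    index: int = 0
    confirmed: List[str] = field(default_factory=list)

    def swipe(self, step: int) -> str:
        """Move the highlight by `step` (+1 or -1), clamped to the list ends."""
        self.index = max(0, min(len(self.items) - 1, self.index + step))
        return self.items[self.index]

    def push(self) -> str:
        """Validate the highlighted item (physical push of the display)."""
        selected = self.items[self.index]
        self.confirmed.append(selected)
        return selected


# Illustrative menu items, not the actual MyMobius menu.
selector = SteeringWheelSelector(["Music", "Contacts", "Navigation"])
selector.swipe(+1)        # highlight moves to "Contacts"; nothing is triggered
selector.swipe(+1)        # highlight moves to "Navigation"
chosen = selector.push()  # only the push validates the command
```

Keeping navigation and validation as separate inputs is what removes the false selections mentioned above: a brush against the display can at most move the highlight.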

From the perspective of our digitalization case study, the main advantage of the steering wheel switch touch display is its ability to afford learnability in a reconfigurable interface. The visual cues shown on the touch display help the user to understand what action will be triggered by pushing to confirm the selection. Learnability is further reinforced by using the same visual icon in both the instrument cluster display and steering wheel touch display to represent the command that will be triggered when the button is pushed. While experienced users may never look down at the steering wheel switches, novice users who are just learning to use the system can benefit greatly from these types of visual cues meant to help guide them through a novel interface.

Voice interaction

One of the MyMobius design goals was to explore what principles should guide future multimodal interactions in the vehicle. Touch interaction can offer the advantages of speed and precision. Simple gestures and steering wheel button presses can often yield sub-second task times with highly consistent and predictable results, especially once the user is familiar with navigating the system. However, tasks using touch interaction quickly move from simple to complex as designers trade breadth, having more dedicated single-function buttons, for depth, using multifunction buttons to navigate a complex menu structure. Touch interaction is also both visually and manually resource intensive, and if the user is actively controlling the vehicle, the prevailing research recommends restricting these types of interactions to limit the potential for driver distraction. In the U.S., the National Highway Traffic Safety Administration (NHTSA) has recommended limiting visual-manual tasks performed while driving to only those that can be completed with glances away from the road of less than 2 seconds and total eyes-off-the-road times of less than 12 seconds [18]. These guidelines roughly limit the breadth of menu items per glance to about 5 or 6, and the depth of the menu system to about 6 levels to complete any given task. With these constraints, designing an interface to find a specific song among thousands becomes challenging.
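The depth estimate above follows from simple arithmetic: a 12-second total budget divided by 2-second glances leaves room for about 6 glances. The sketch below makes that calculation explicit. It assumes one glance per menu level and treats both limits as inclusive, which are simplifications made here for illustration and not part of the guidelines; the function names are likewise hypothetical.

```python
MAX_GLANCE_S = 2.0   # single-glance limit cited in the text [18]
MAX_TOTAL_S = 12.0   # total eyes-off-the-road limit cited in the text [18]


def max_menu_depth(glance_s=MAX_GLANCE_S):
    """Menu levels that fit in the total budget, assuming one glance per level."""
    return int(MAX_TOTAL_S // glance_s)


def within_guidelines(glances_s):
    """True if every glance and the summed glance time stay within both limits.

    Limits are treated as inclusive here (an assumption of this sketch).
    """
    return (all(g <= MAX_GLANCE_S for g in glances_s)
            and sum(glances_s) <= MAX_TOTAL_S)


depth = max_menu_depth()                 # 12 s / 2 s per glance
ok_task = within_guidelines([1.5] * 6)   # six short glances, 9 s in total
long_glance = within_guidelines([2.5, 1.0])  # one glance exceeds the 2 s limit
```

Under these assumptions, a song library with thousands of entries cannot be reached by menu navigation alone: even 6 items per level over 6 levels requires every glance to land on the right branch.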

As the complexity of interactions with in-vehicle systems increases, providing the best user experience with touch interaction alone may not be possible. In keeping with our earlier example, finding a specific song using a touch interface may take many gestures or clicks, but through a voice interface, that same song could be accessed directly in a single command, provided the system accurately understands speech in the noisy vehicle environment. Many high-end vehicles already offer voice command interfaces, but these interfaces have been extremely limited, requiring users to learn a specific set of commands and syntax to interact with the system. Recent advances in natural language processing have begun to enable conversational voice assistants such as Amazon’s Alexa, Apple’s Siri, Google Assistant, and Microsoft’s Cortana, and this technology will go a long way towards enabling intuitive voice interactions within vehicles. Designing for the best of both worlds, the Valeo MyMobius user interface paired the touch display interface for frequent quick actions with a conversational voice assistant to more directly access deep menu items.

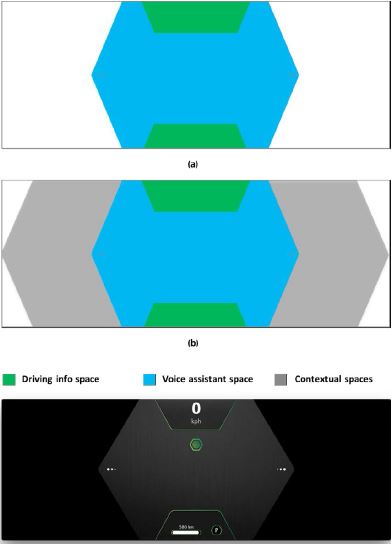

Visual design: Full of emptiness

The starting point for the MyMobius visual concept came from Kenya Hara’s MUJI design philosophy where he described his idea of “Emptiness” [19]. Emptiness, as he explains, is not simplicity or minimalism, but “Emptiness is a receptacle for creativity.” Applied to automotive user interfaces, emptiness is not about clearing the digital spaces and removing information, but rather it is about creating spaces that are ready to receive inspiration and afford the display of information. As shown in Figure 7, the instrument cluster user interface is divided into several areas, and each area is dedicated to a specific category of information.

The green zones are reserved to always display critical car-related information like speed, telltales, driving state, and turn indicators. The blue zones are reserved to support voice assistant interactions. The gray zones, normally hidden or “empty”, are reserved for contextual feature interactions, such as navigating music, contacts, vehicle navigation settings, and notifications. The emptiness of the contextual spaces invites the user to interact with and fill those spaces. However, the major design challenge that we found was how to cue or entice the user into discovering what interactions were possible to fill the “empty” spaces. The solution was adding the three-dot icon shown in Figure 7 (c). The three dots subtly increased in size in one direction to indicate that a swipe in that direction was allowed and would activate an interface feature. As described in the previous section, this visual cue was shown on both the instrument cluster display and on the corresponding steering wheel touch display to afford learnability. Thus, a swipe left on the left steering wheel touch display opens the left user interface drawer, and a swipe right on the right steering wheel touch display opens the right user interface drawer. Once the user made the association between the three-dot icon and the swipe gesture, this visual cue revealed what next actions were possible with the user interface.

Personalization

Profiling and personalization are integral parts of making the MyMobius concept seem more intuitive. Learning algorithms monitor vehicle occupancy, location, driving behavior, and feature usage to contextually learn user habits. Profiles can be built to predict who is driving, where they are going, what type of music or entertainment media is desired, and what calls might be made during a drive. The learning engine could then feed the user interface with a list of most probable destinations, music selections, and contacts. The MyMobius interface (Figure 8) afforded quick access to the top predicted options through the touch display interaction. By reducing the visual-manual interactions to the top predictions, the user interface dramatically reduced the number of steps to reach the desired selection, making the interface seem more intuitive. If the predictions were not relevant, the user could always trigger the voice assistant to provide additional options through the voice interface.
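As a rough illustration of how such predictions might be produced, the sketch below ranks past selections by how often they occurred in a given context. It is not the MyMobius learning engine, whose internals are not described here; the `HabitModel` class, its method names, and the use of a (driver, time slot) tuple as the context are assumptions made for this example.

```python
from collections import Counter, defaultdict


class HabitModel:
    """Counts past selections per context and returns the most frequent ones."""

    def __init__(self) -> None:
        # Maps a context (e.g. driver and time slot) to selection counts.
        self._counts = defaultdict(Counter)

    def record(self, context: tuple, choice: str) -> None:
        """Log one observed selection (destination, playlist, contact, ...)."""
        self._counts[context][choice] += 1

    def top_predictions(self, context: tuple, n: int = 3) -> list:
        """Return up to n choices for this context, most frequent first."""
        counts = self._counts.get(context, Counter())
        return [choice for choice, _ in counts.most_common(n)]


if __name__ == "__main__":
    model = HabitModel()
    ctx = ("driver_a", "weekday_morning")
    for destination in ["Office", "Office", "School", "Office", "School", "Gym"]:
        model.record(ctx, destination)
    print(model.top_predictions(ctx, n=2))
```

An empty result for an unseen context would correspond to the fallback described above, where the user turns to the voice assistant for additional options.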

Contextual feature suggestion

Discovering a new vehicle’s capabilities is a process that should be transparent, at least when it’s well designed. Unfortunately, most of the time, users are not aware of their vehicle’s full capabilities and end up not using their vehicle to its full potential. Rather than requiring users to read the owner’s manual to discover new features, the Valeo MyMobius concept envisioned that an assistant could embody the role of a coach or copilot, facilitating a better understanding of the car’s capabilities. An intelligent and predictive assistant could play a key role in the discovery phase, creating a more natural dialog between the user and the vehicle.

As an example, the same vehicle sensors used to enable ADAS and driving automation constantly collect data, even when those systems are not in use. Learning algorithms can use that data to understand the driving context, the driving style, and the driver’s preferences. The assistant can learn desired routes, speeds, and following distances. With this knowledge, the assistant can continually preconfigure the Adaptive Cruise Control (ACC) feature with the driver’s desired speed and preferred inter-vehicle following gap on a particular road under any number of weather and traffic conditions, so that the system is always ready to be engaged with a single click. Furthermore, if users are unfamiliar with, or are underutilizing, certain features such as ACC, then the assistant could suggest engaging that feature on ideal roads and under ideal weather and traffic conditions, where the feature would be appropriate to use. Conversely, if the driver is approaching a section of road with traffic that may not be conducive to ACC operation, the assistant could warn the driver to be vigilant for stopped traffic and ready to disengage the ACC system.
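The suggest-or-warn behavior described above can be sketched as a simple rule. This is a hypothetical simplification, not the logic used in the concept: the `DrivingContext` fields, the `underuse_threshold` parameter, and the returned labels are all assumptions, and a real system would derive them from learned models rather than boolean flags.

```python
from dataclasses import dataclass


@dataclass
class DrivingContext:
    road_suitable: bool   # assumed flag: road type is appropriate for ACC
    weather_ok: bool      # assumed flag: weather is within ACC operating limits
    traffic_ok: bool      # assumed flag: upcoming traffic is conducive to ACC
    acc_engaged: bool     # whether ACC is currently active
    recent_acc_uses: int  # how often the driver engaged ACC recently


def assistant_action(ctx: DrivingContext, underuse_threshold: int = 2) -> str:
    """Return 'warn', 'suggest', or 'none' for the current context."""
    conditions_ideal = ctx.road_suitable and ctx.weather_ok and ctx.traffic_ok
    if ctx.acc_engaged and not ctx.traffic_ok:
        # ACC is on but traffic ahead is unfavorable: ask the driver to stay vigilant.
        return "warn"
    underused = ctx.recent_acc_uses < underuse_threshold
    if not ctx.acc_engaged and conditions_ideal and underused:
        # Ideal conditions and the feature is rarely used: offer one-click activation.
        return "suggest"
    return "none"


if __name__ == "__main__":
    print(assistant_action(DrivingContext(True, True, True, False, 0)))
    print(assistant_action(DrivingContext(True, True, False, True, 5)))
```

Keeping the warning check ahead of the suggestion check reflects the priority implied in the text: alerting a driver who is already using ACC matters more than promoting the feature.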

In another example, the assistant might suggest activating Valeo XtraVue®, a connected-vehicle, camera-sharing concept that would allow a driver attempting to overtake a slow-moving vehicle to “see through” that vehicle and visually confirm that it is safe to overtake. If the assistant detected that both vehicles were equipped and the driver was following unusually closely, then the assistant would automatically prompt the user to activate the system. Since such a system is usable only in certain, infrequent circumstances, especially at low market penetration, the user shouldn’t be burdened with understanding the details of the system. The assistant should simply suggest using the system when it is available and may benefit the user.

As discussed earlier, in the Valeo MyMobius design, space was allocated in the center of the instrument cluster for the conversational assistant’s visual interactions. Similar to how the voice assistant interprets conversational style interactions using natural language processing, the visual component of the MyMobius assistant mirrored a conversation, appearing as a text or chat dialog. The conversational assistant also supported multimodal interaction. If the system suggested that the driver could engage the ACC, the reconfigurable steering wheel touch displays would allow the driver to confirm or reject the suggestion with a single click. This one-click activation reduced the effort of searching for how to activate the suggested feature.

Figure 7: MyMobius digital cluster composition. (a) nominal user interface composition (b) extended composition (c) final rendering.

Figure 8: Digital cluster extended content.

Furthermore, the assistant suggestions could prompt the driver to start a dialog with the assistant. If the user is not familiar with the suggested feature or has a question about it, he/she can simply ask the assistant: “What is ACC?” or “Why isn’t XtraVue available?” The assistant feature can potentially bridge the knowledge gap between the user and the system, increasing the perception of intuitiveness, and promoting the usage of more complex and potentially underutilized vehicle features.

Conclusion

While there is no shortage of automotive user interface concepts created by the industry, vehicle design cycles, from concept to sale, can range from 3-5 years, and ultimately, cost is often valued above user experience, making it difficult to quickly gauge the credibility of any particular concept. Even if a particular concept is well received, the long design cycle usually means that new products are kept confidential until after a vehicle is launched 3-5 years later. However, there is still value in reviewing the underlying design inspiration behind the Valeo MyMobius concept. Treating this concept as a case study, this paper explored how to achieve an intuitive driving experience as the automotive industry undergoes the digitalization transition, moving from analog to digital interfaces and from physical buttons to multimodal interactions. Although an interface, in essence, cannot be intuitive, we know that people consider an interface intuitive when it helps them to easily intuit how to complete their desired tasks. To achieve this, designers must understand users’ current knowledge point, predict where knowledge gaps might exist, and design to reduce potential physical and cognitive friction points in the user experience. Fortunately, most users have already experienced a digitalization shift through the evolution of smartphones, and this allows automotive designers to consider digital smartphone user knowledge, instead of the automotive user knowledge, as the new starting point for future digital automotive user interfaces. Considering the amount of time that users spend on their smartphones compared with the amount of time that they spend in their cars, visual interfaces, touch display gestures, and natural language interactions that mimic smartphone usage will likely feel more natural and intuitive.

In the Valeo MyMobius concept, touch interaction, including swiping gestures, was supported through the addition of reconfigurable touch display steering wheel switches, and voice interaction was supported through a conversational voice assistant. In an attempt to minimize potential distractions and utilize the right interface for the right task, touch interaction was utilized for quick access to frequently used features or selections, while the voice assistant provided direct access to less frequently used features or selections, which might otherwise be buried deep in a menu structure. Correspondingly, to minimize visual distraction, the visual design aesthetic embodied Kenya Hara’s design philosophy of “Emptiness,” reducing visual clutter and creating spaces that are ready to receive inspiration and information.

Both the reconfigurable touch displays and the design of the predictive assistant promoted system learnability as a means to bridge potential knowledge gaps. As the user navigated the interface through touch display gestures, corresponding icons appeared on both the instrument cluster and the touch display, providing visual feedback to teach the user what action would be taken with the selection or confirmation click. The conversational assistant’s chat style interaction promoted a dialog between the vehicle and the driver. After learning a driver’s style and preferences, the assistant could contextually suggest that the driver activate underutilized driving assistance features, like Adaptive Cruise Control (ACC), allowing for one-click activation. Alternatively, if the driver was unfamiliar with the feature, the assistant provided a natural method to find out more information about the feature. Altogether, the Valeo MyMobius concept demonstrated an attainable future with today’s technologies, where the in-vehicle user experience can be personalized, contextual, and learnable, raising drivers’ awareness of their vehicles’ capabilities and achieving the perception of intuitiveness.

Supplementary Materials

Valeo MyMobius Concept YouTube marketing video: https://youtu.be/-tqTNmhF2nU

Valeo XtraVue® Concept YouTube marketing video: https://youtu.be/akYpdIcMC2g

Author’s Contribution

Siav-Kuong Kuoch, Christopher Nowakowski and Katharina Hottelart were the primary authors of the paper. Siav-Kuong Kuoch and Pierre Escrieut were the primary designers who developed and implemented the MyMobius concept. Patrice Reilhac supervised and guided the team.

Conflicts of Interest

The concept described in this paper was conceived and implemented by the authors while employed by Valeo during the normal course of research and development. The authors declare no known conflicts of interest.

References

[1] Meixner G, Häcker C, Decker B, Gerlach S, Hess A, et al. (2017) Automotive User Interfaces. Retrospective and Future Automotive Infotainment Systems - 100 Years of User Interface Evolution. Springer International Publishing, Switzerland.

[2] Reilhac P, Hottelart K, Diederichs F, Nowakowski C (2017) Automotive User Interfaces. User Experience with Increasing Levels of Vehicle Automation: Overview of the Challenges and Opportunities as Vehicles Progress from Partial to High Automation. Springer International Publishing, Switzerland.

[3] Reilhac P, Millett N, Hottelart K (2016) Road Vehicle Automation 3. Shifting Paradigms and Conceptual Frameworks for Automated Driving. Springer International Publishing, Switzerland.

[4] Singh S (2016) The Five Pillars of Digitisation That Will Transform The Automotive Industry. Forbes.

[5] Soko O (2018) Automotive Touch Panel Market Report - 2017. IHS Markit, 2017.

[6] Del-Colle A (2013) Carchaeology: 1986 Buick Riviera Introduces the Touchscreen. Popular Mechanics.

[7] Neff J (2012) BMW adds iDrive Touch and 3D maps to latest generation infotainment system. Autoblog.com.

[8] Mateja J (1995) Oldsmobile’s $1995 Talking Map. Chicago Tribune.

[9] Reilhac P, Moizard J, Kaiser F, Hottelart K (2016) Cockpit Concept for Conditional Automated Driving. ATZ magazine 3: 42-46.

[10] Reilhac P, Millett N, Hottelart K (2015) Road Vehicle Automation 2. Thinking intuitive driving automation. Springer Verlag.

[11] Diederichs F, Bischoff S, Widlroither H, Reilhac P, Hottelart K, et al. (2015) New HMI concept for an intuitive automated driving experience and enhanced transitions. 7th Conference on Automotive User Interfaces and Interactive Vehicular Applications.

[12] George P (2014) Infotainment Systems Are Too Damn Complicated. Jalopnik, 2014.

[13] Sackman J (2016) 10 Ways That Cars Are Becoming Too Complicated (And Annoying). Goliath, 2016.

[14] Spool JM (2018) What Makes a Design Seem Intuitive?

[15] Mauney D, Le Hong S, Barkataki R, Vastamaki R, Fuchs F, et al. (2010) Cultural differences and similarities in the use of gestures on touchscreen user interfaces. International Usability Professionals Association (UPA) Conference, Munich.

[16] Babich N (2016) In-app Gestures and Mobile App Usability. Uxplanet.org.

[17] Krenn M (2014) A New Car UI - How Touch Screen Controls in Cars Should Really Work.

[18] National Highway Traffic Safety Administration (2013) Visual-Manual NHTSA Driver Distraction Guidelines for In-Vehicle Electronic Devices (Docket No. NHTSA-2010-0053), Washington, DC.

[19] Hara K (2016) Visualize the philosophy of MUJI.